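This guide gives a brief overview of developing for Android XR with Unity. Android XR works with the Unity tools and features you already know. Because Unity's Android XR support is built on OpenXR, many of the features described in the OpenXR overview are also supported in Unity.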

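Follow this guide to learn about:

- Unity support for Android XR

- Unity XR basics

- Developing and publishing apps for Android XR

- Unity packages for Android XR

- Unity OpenXR: Android XR package

- Android XR Extensions for Unity

- Feature and compatibility considerations

- Input and interaction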

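Unity support for Android XR

When you build Unity apps for Android XR, you can take advantage of the mixed reality tools and features in the latest version, Unity 6. These include mixed reality templates that use the XR Interaction Toolkit, AR Foundation, and the OpenXR Plugin to help you get started quickly. When building apps for Android XR with Unity, we recommend the Universal Render Pipeline (URP) as your render pipeline and Vulkan as your graphics API. These settings let you use some of Unity's graphics features that are supported only with Vulkan. For more information on configuring these settings, see the project setup guide.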
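If you prefer to apply the Vulkan recommendation from a script rather than through Player Settings, a minimal Editor sketch could look like the following. The class name and menu path are hypothetical; `PlayerSettings.SetUseDefaultGraphicsAPIs`, `PlayerSettings.SetGraphicsAPIs`, and `GraphicsSettings.currentRenderPipeline` are standard Unity APIs. The script only reports which render pipeline asset is active; assigning a URP asset is still done as described in the project setup guide.

```csharp
// Editor-only sketch: place this file in an "Editor" folder.
using UnityEditor;
using UnityEngine;
using UnityEngine.Rendering;

public static class AndroidXRGraphicsSetup
{
    // Hypothetical menu path; rename as you like.
    [MenuItem("Tools/Android XR/Use Vulkan for Android")]
    public static void UseVulkanForAndroid()
    {
        // Disable "Auto Graphics API" so the explicit list below is used.
        PlayerSettings.SetUseDefaultGraphicsAPIs(BuildTarget.Android, false);

        // Make Vulkan the only graphics API for Android builds.
        PlayerSettings.SetGraphicsAPIs(BuildTarget.Android, new[] { GraphicsDeviceType.Vulkan });

        // A null render pipeline asset means the Built-in Render Pipeline is active.
        RenderPipelineAsset pipeline = GraphicsSettings.currentRenderPipeline;
        Debug.Log(pipeline == null
            ? "No render pipeline asset is active (Built-in Render Pipeline); assign a URP asset."
            : $"Active render pipeline asset: {pipeline.name} ({pipeline.GetType().Name})");
    }
}
```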

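Unity XR basics

If you're new to Unity or XR development, see Unity's XR manual to learn basic XR concepts and workflows. The XR manual covers:

- XR provider plug-ins, including Unity OpenXR: Android XR and Android XR Extensions for Unity

- XR support packages that add further application-level features

- The XR architecture guide, which describes the Unity XR tech stack and XR subsystems

- XR project setup

- Building and running XR apps

- XR graphics guides, covering the Universal Render Pipeline, stereo rendering, foveated rendering, multiview render regions, and VR frame timing

- The XR audio guide, including support for audio spatializers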

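Develop and publish Android apps

Unity provides in-depth documentation on developing, building, and publishing for Android, covering topics such as Android permissions in Unity, Android Build Settings, building apps for Android, and delivering to Google Play.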
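Some Android XR features, such as hand tracking, may require runtime permissions. A minimal sketch of requesting one with Unity's `UnityEngine.Android.Permission` API follows. The class name is hypothetical, and the default permission string `android.permission.HAND_TRACKING` is an assumption; check it against the Android XR permissions list for the features you enable.

```csharp
using UnityEngine;
#if UNITY_ANDROID
using UnityEngine.Android;
#endif

public class RuntimePermissionRequester : MonoBehaviour
{
    // Assumption: hand-tracking permission string. Replace it with the
    // permission that your enabled features actually require.
    [SerializeField] private string permission = "android.permission.HAND_TRACKING";

    void Start()
    {
#if UNITY_ANDROID && !UNITY_EDITOR
        if (Permission.HasUserAuthorizedPermission(permission))
        {
            Debug.Log($"{permission} already granted.");
            return;
        }

        // Callbacks report the user's choice asynchronously.
        var callbacks = new PermissionCallbacks();
        callbacks.PermissionGranted += p => Debug.Log($"{p} granted.");
        callbacks.PermissionDenied += p => Debug.LogWarning($"{p} denied; disable the dependent feature.");
        Permission.RequestUserPermission(permission, callbacks);
#endif
    }
}
```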

适用于 Android XR 的 Unity 软件包

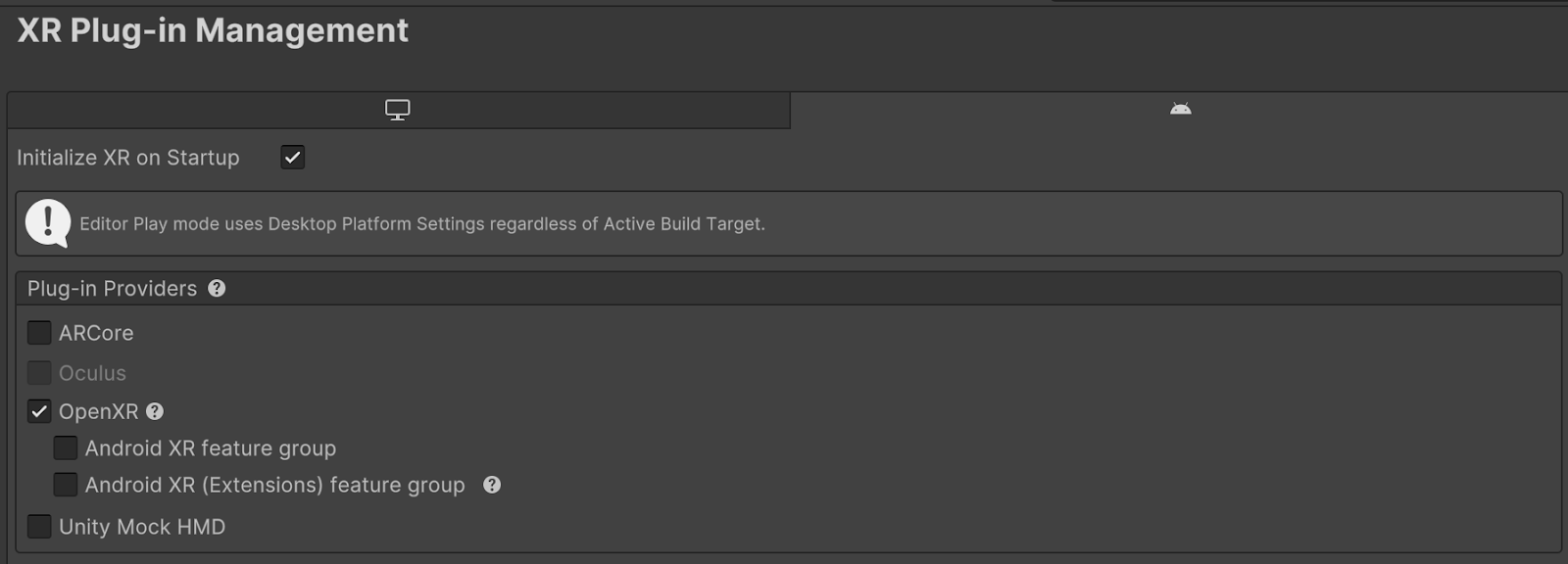

有两个软件包可用于构建适用于 Android XR 的 Unity 应用。这两个软件包都是 XR 提供程序插件,可以通过 Unity 的 XR 插件管理软件包启用。XR 插件管理器添加了项目设置,用于管理和提供有关加载、初始化、设置和构建 XR 插件支持的帮助。如需允许应用在运行时执行 OpenXR 功能,必须通过插件管理器启用这些功能。

此图片显示了一个示例,说明了您可以通过 Unity 的编辑器在何处启用这些功能组。
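To confirm at runtime that a provider plug-in loaded and to see which OpenXR features are enabled, a minimal sketch could query XR Plug-in Management and the OpenXR settings. `XRGeneralSettings`, `XRManagerSettings.activeLoader`, and `OpenXRSettings.GetFeatures` come from the XR Plug-in Management and OpenXR packages; the class name is hypothetical, and logging each feature's type name is just one convenient way to identify it.

```csharp
using UnityEngine;
using UnityEngine.XR.Management;
using UnityEngine.XR.OpenXR;

public class XRStartupCheck : MonoBehaviour
{
    void Start()
    {
        XRManagerSettings manager = XRGeneralSettings.Instance != null
            ? XRGeneralSettings.Instance.Manager
            : null;

        // activeLoader stays null when no XR provider plug-in was initialized.
        if (manager == null || manager.activeLoader == null)
        {
            Debug.LogWarning("No XR loader is active. Check XR Plug-in Management.");
            return;
        }
        Debug.Log($"Active XR loader: {manager.activeLoader.name}");

        // Log the OpenXR features enabled for the current build target.
        foreach (OpenXRFeature feature in OpenXRSettings.Instance.GetFeatures())
        {
            if (feature.enabled)
                Debug.Log($"Enabled OpenXR feature: {feature.GetType().Name}");
        }
    }
}
```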

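Unity OpenXR Android XR

The Unity OpenXR Android XR package is an XR plug-in that adds Android XR support to Unity. This plug-in provides most of Unity's Android XR support and enables Android XR device support for AR Foundation projects. AR Foundation is designed for developers who want to build AR or mixed reality experiences. It provides interfaces for AR features but doesn't implement any of them itself; the Unity OpenXR Android XR package provides the implementation. To get started with this package, see the package manual, which includes a Getting started guide.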

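Android XR Extensions for Unity

Android XR Extensions for Unity complements the Unity OpenXR Android XR package with additional features that help you build immersive experiences. It can be used on its own or together with the Unity OpenXR Android XR package.

To get started with this package, follow our project setup guide or the quickstart for importing Android XR Extensions for Unity.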

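Feature and compatibility considerations

The following table lists the features supported by the Unity OpenXR: Android XR package and the Android XR Extensions for Unity package. Use it to find which package contains the features you need and to check for compatibility considerations.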

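| Feature | Unity OpenXR: Android XR feature string | Android XR Extensions for Unity feature string | Use case and expected behavior |
|---|---|---|---|
| Session management | Android XR: AR Session | Android XR (Extensions): Session Management | To use features from either package, you must enable the session feature for that package. You can enable both sets of features at the same time; each feature handles conflicts accordingly. |
| Device tracking | N/A | N/A | Device tracking follows the device's position and rotation in physical space. The XR Origin GameObject handles device tracking automatically and transforms trackables into Unity's coordinate system, using its XROrigin component and a GameObject hierarchy that contains a Camera and a TrackedPoseDriver. |
| Camera | Android XR: AR Camera | N/A | This feature supports light estimation and full-screen passthrough. |
| Plane detection | Android XR: AR Plane | Android XR (Extensions): Planes | These two features are identical; use only one of them. Android XR (Extensions): Planes is included so that developers can use the Android XR (Extensions): Object Tracking and Persistent Anchors features without depending on the Unity OpenXR Android XR package. In the future, Android XR (Extensions): Planes will be removed in favor of Android XR: AR Plane. |
| Object tracking | N/A | Android XR (Extensions): Object Tracking | This feature detects and tracks objects in the physical environment and can be used with a reference object library. |
| Face tracking | Android XR: AR Face | Android XR: Face Tracking | Avatar eye support is provided by the Android XR: AR Face feature. Access the user's facial expressions through the Android XR: Face Tracking feature. The two features can be used together. |
| Raycasts | Android XR: AR Raycast | N/A | Lets you cast a ray and compute where it intersects plane trackables or depth trackables detected in the physical environment. |
| Anchors | Android XR: AR Anchor | Android XR (Extensions): Anchor | Both features support spatial anchors and plane anchors; use only one of them. For persistent anchors, use Android XR (Extensions): Anchor. In the future, Android XR (Extensions): Anchor will be removed and all anchor functionality will move to Android XR: AR Anchor. |
| Occlusion | Android XR: AR Occlusion | N/A | Occlusion makes mixed reality content in your app appear hidden, or partially hidden, behind objects in the physical environment. |
| Performance metrics | Android XR Performance Metrics | N/A | Use this feature to access performance metrics for Android XR devices. |
| Composition layers | Composition Layer Support (requires the OpenXR Plugin and XR Composition Layers) | Android XR: Passthrough Composition Layer | Use Unity's Composition Layer Support feature to create basic composition layers (for example, quad, cylinder, and projection layers). Android XR: Passthrough Composition Layer lets you create a passthrough layer with a custom mesh read from a Unity GameObject. |
| Foveated rendering | Foveated Rendering (requires the OpenXR Plugin) | Foveation (Legacy) | Foveated rendering speeds up rendering by lowering the resolution in the user's peripheral vision. Unity's foveated rendering feature works only in apps that use URP and Vulkan. The Foveation (Legacy) feature in Android XR Extensions for Unity also supports the Built-in Render Pipeline and OpenGL ES. We recommend using Unity's foveated rendering feature whenever possible; note that URP and Vulkan are both recommended when building for Android XR. |
| Unbounded reference space | N/A | Android XR: Unbounded Reference Space | This feature sets the XRInputSubsystem tracking origin mode to Unbounded, meaning the XRInputSubsystem tracks all InputDevices relative to a world anchor, which can change. |
| Environment blend mode | N/A | Environment Blend Mode | Lets you set the XR environment blend mode, which controls how virtual imagery blends with the real-world environment when passthrough is enabled. |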
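To observe the behavior of the Android XR: Unbounded Reference Space feature from the table above, a sketch like the following queries each `XRInputSubsystem` for its current and supported tracking origin modes and listens for origin updates. The class name is hypothetical. The feature normally sets the mode for you, and the XR Origin's requested tracking origin mode can also change it, so the explicit `TrySetTrackingOriginMode` call is only a fallback under that assumption.

```csharp
using System.Collections.Generic;
using UnityEngine;
using UnityEngine.XR;

public class TrackingOriginLogger : MonoBehaviour
{
    readonly List<XRInputSubsystem> _subsystems = new List<XRInputSubsystem>();

    void Start()
    {
        SubsystemManager.GetSubsystems(_subsystems);
        foreach (XRInputSubsystem subsystem in _subsystems)
        {
            TrackingOriginModeFlags supported = subsystem.GetSupportedTrackingOriginModes();
            Debug.Log($"Origin mode: {subsystem.GetTrackingOriginMode()}, supported: {supported}");

            // Fallback: request Unbounded only if the runtime reports support for it.
            if ((supported & TrackingOriginModeFlags.Unbounded) != 0 &&
                !subsystem.TrySetTrackingOriginMode(TrackingOriginModeFlags.Unbounded))
            {
                Debug.LogWarning("Could not switch to the Unbounded tracking origin mode.");
            }

            // Raised when the tracking origin moves, for example when the world anchor changes.
            subsystem.trackingOriginUpdated += OnTrackingOriginUpdated;
        }
    }

    void OnTrackingOriginUpdated(XRInputSubsystem subsystem)
    {
        Debug.Log("Tracking origin updated; re-check world-locked content.");
    }

    void OnDestroy()
    {
        foreach (XRInputSubsystem subsystem in _subsystems)
            subsystem.trackingOriginUpdated -= OnTrackingOriginUpdated;
    }
}
```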

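Input and interaction

Android XR supports multimodal natural input.

In addition to hand and eye tracking, Android XR supports peripherals such as 6DoF controllers, mice, and physical keyboards. This means Android XR apps should support hand interaction and must not assume that every device has controllers.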

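Interaction profiles

Unity uses interaction profiles to manage how XR apps communicate with different XR devices and platforms. These profiles define the expected inputs and outputs for each hardware configuration, which keeps behavior compatible and consistent across platforms. Enabling the right interaction profiles helps your XR app work correctly on different devices, keeps input mappings consistent, and gives you access to specific XR features. To set up an interaction profile:

- Open the Project Settings window (menu: Edit > Project Settings).

- Click XR Plug-in Management to expand the plug-in section, if necessary.

- Select OpenXR in the list of XR plug-ins.

- In the Interaction Profiles section, select the + button to add a profile.

- Select the profile you want to add from the list.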
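After adding profiles, you can confirm at runtime which of the corresponding OpenXR extensions were actually enabled. The sketch below uses `OpenXRRuntime.IsExtensionEnabled` from the OpenXR plug-in; the class name is hypothetical, and the extension list simply mirrors the profiles described in this section.

```csharp
using UnityEngine;
using UnityEngine.XR.OpenXR;

public class InteractionExtensionCheck : MonoBehaviour
{
    // Extension names of the interaction profiles described in this guide.
    static readonly string[] Extensions =
    {
        "XR_EXT_hand_interaction",
        "XR_EXT_eye_gaze_interaction",
        "XR_EXT_palm_pose",
        "XR_ANDROID_mouse_interaction",
    };

    void Start()
    {
        foreach (string extension in Extensions)
        {
            // True only if the runtime supports the extension and it was enabled at startup.
            bool enabled = OpenXRRuntime.IsExtensionEnabled(extension);
            Debug.Log($"{extension}: {(enabled ? "enabled" : "not enabled")}");
        }
    }
}
```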

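Hand interaction

Hand interaction (XR_EXT_hand_interaction) is provided by the OpenXR plug-in. Enabling the Hand Interaction profile exposes the <HandInteraction> device layout in the Unity Input System. Use this interaction profile for hand input built on the four action poses defined by OpenXR: pinch, poke, aim, and grip. If you need additional hand interaction or hand tracking features, see XR Hands on this page.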
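Because Android XR apps can't assume a controller is present, it can help to know when the `<HandInteraction>` device appears or disappears. This sketch (class name hypothetical) watches `InputSystem.onDeviceChange` and matches the `HandInteraction` layout named above, including layouts derived from it. It doesn't read specific pinch or grip controls; look up their names in the Input Debugger.

```csharp
using UnityEngine;
using UnityEngine.InputSystem;

public class HandInteractionDeviceWatcher : MonoBehaviour
{
    // Layout exposed by the Hand Interaction profile.
    const string HandInteractionLayout = "HandInteraction";

    void OnEnable()
    {
        // Report devices that already exist, then listen for changes.
        foreach (InputDevice device in InputSystem.devices)
            Report(device, InputDeviceChange.Added);
        InputSystem.onDeviceChange += Report;
    }

    void OnDisable() => InputSystem.onDeviceChange -= Report;

    void Report(InputDevice device, InputDeviceChange change)
    {
        if (change != InputDeviceChange.Added && change != InputDeviceChange.Removed)
            return;

        // Match the layout itself or any layout based on it.
        bool isHand = device.layout == HandInteractionLayout ||
                      InputSystem.IsFirstLayoutBasedOnSecond(device.layout, HandInteractionLayout);
        if (isHand)
            Debug.Log($"Hand interaction device {change}: {device.name} [{string.Join(", ", device.usages)}]");
    }
}
```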

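Eye gaze interaction

Eye gaze interaction (XR_EXT_eye_gaze_interaction) is provided by the OpenXR plug-in. Use its layout to retrieve the eye pose data (position and rotation) returned by the extension. For more information about eye gaze interaction, see the OpenXR input guide.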
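A minimal sketch of reading eye gaze through the Input System. It assumes the OpenXR plug-in registers the eye gaze device under the layout name `EyeGaze` with a `pose` control, so the binding paths are `<EyeGaze>/pose/position` and `<EyeGaze>/pose/rotation`; confirm these in the Input Debugger. The class name is hypothetical, and the debug ray assumes the XR Origin sits at the world origin, because the pose is reported in tracking space.

```csharp
using UnityEngine;
using UnityEngine.InputSystem;

public class EyeGazeReader : MonoBehaviour
{
    // Assumption: "EyeGaze" layout with a "pose" control.
    InputAction _gazePosition;
    InputAction _gazeRotation;

    void OnEnable()
    {
        _gazePosition = new InputAction(type: InputActionType.Value, binding: "<EyeGaze>/pose/position");
        _gazeRotation = new InputAction(type: InputActionType.Value, binding: "<EyeGaze>/pose/rotation");
        _gazePosition.Enable();
        _gazeRotation.Enable();
    }

    void OnDisable()
    {
        _gazePosition.Dispose();
        _gazeRotation.Dispose();
    }

    void Update()
    {
        // Pose values are in tracking space, not world space.
        Vector3 position = _gazePosition.ReadValue<Vector3>();
        Quaternion rotation = _gazeRotation.ReadValue<Quaternion>();
        Debug.DrawRay(position, rotation * Vector3.forward, Color.cyan);
    }
}
```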

Controller interaction

Android XR supports the Oculus Touch Controller Profile for 6DoF controllers. This profile is provided by the OpenXR plug-in.

Mouse interaction

The Android XR Mouse Interaction Profile (XR_ANDROID_mouse_interaction) is provided by Android XR Extensions for Unity. It exposes the <AndroidXRMouse> device layout in the Unity Input System.

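Palm pose interaction

The OpenXR plug-in supports palm pose interaction (XR_EXT_palm_pose), which exposes the <PalmPose> layout in the Unity Input System.

Palm pose isn't meant to replace extensions or packages that perform hand tracking for more complex use cases. Instead, use it to place app-specific visual content, such as avatar visuals. The palm pose includes both the palm's position and its orientation.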

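XR Hands

The XR Hands package gives you access to hand tracking data through XR_EXT_hand_tracking and XR_FB_hand_tracking_aim, and provides a wrapper that converts hand joint data from hand tracking into input poses. To use the features provided by the XR Hands package, enable the Hand Tracking Subsystem and Meta Hand Tracking Aim OpenXR features.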


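The XR Hands package is useful if you need finer-grained hand poses or hand joint data, or if you want to use custom gestures.

For more information, see Unity's documentation on setting up XR Hands in your project.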
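As an example of a custom gesture built on XR Hands joint data, the sketch below treats the right hand as pinching when the thumb tip and index tip are closer than a threshold. The class name and the 2 cm threshold are illustrative assumptions; `XRHandSubsystem`, `XRHand.GetJoint`, and `XRHandJoint.TryGetPose` are XR Hands APIs.

```csharp
using System.Collections.Generic;
using UnityEngine;
using UnityEngine.XR.Hands;

public class SimplePinchDetector : MonoBehaviour
{
    // Illustrative threshold in meters; tune it for your app.
    [SerializeField] float pinchThreshold = 0.02f;

    readonly List<XRHandSubsystem> _subsystems = new List<XRHandSubsystem>();
    XRHandSubsystem _subsystem;
    bool _wasPinching;

    void Update()
    {
        // Find a running hand subsystem (requires the Hand Tracking Subsystem feature).
        if (_subsystem == null || !_subsystem.running)
        {
            _subsystem = null;
            SubsystemManager.GetSubsystems(_subsystems);
            foreach (XRHandSubsystem candidate in _subsystems)
            {
                if (candidate.running) { _subsystem = candidate; break; }
            }
            if (_subsystem == null)
                return;
        }

        // Log only on state changes to avoid per-frame spam.
        bool isPinching = IsPinching(_subsystem.rightHand);
        if (isPinching != _wasPinching)
            Debug.Log(isPinching ? "Right-hand pinch started" : "Right-hand pinch ended");
        _wasPinching = isPinching;
    }

    // Custom gesture: thumb tip and index tip closer than the threshold.
    bool IsPinching(XRHand hand)
    {
        if (!hand.isTracked)
            return false;

        // Both joint poses share the same space, so their distance is meaningful.
        if (hand.GetJoint(XRHandJointID.ThumbTip).TryGetPose(out Pose thumbTip) &&
            hand.GetJoint(XRHandJointID.IndexTip).TryGetPose(out Pose indexTip))
        {
            return Vector3.Distance(thumbTip.position, indexTip.position) < pinchThreshold;
        }
        return false;
    }
}
```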

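Face tracking confidence regions

The XR_ANDROID_face_tracking extension provides confidence values for three face regions: upper left, upper right, and lower face. These values range from 0 (no confidence) to 1 (highest confidence) and indicate how accurate face tracking is in each region.

You can use these confidence values to gradually disable blend shapes, or to apply visual filters (such as blur) to the corresponding face region. For a simple on/off approach, disable the blend shapes in a face region whose confidence is low.

The lower face region covers everything below the eyes, including the mouth, chin, cheeks, and nose. The two upper regions cover the eye and eyebrow areas on the left and right sides of the face.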

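The following C# snippet shows how to access and use the confidence data in a Unity script. The serialized field types (an `XRFaceTrackingManager` component and uGUI `Text` labels) are assumptions added to make the snippet self-contained; adjust them to match your scene.

```csharp
using UnityEngine;
using UnityEngine.UI;      // Assumption: debug output uses uGUI Text labels.
using Google.XR.Extensions;

public class FaceTrackingConfidence : MonoBehaviour
{
    // Assumption: the face tracking component from Android XR Extensions for Unity
    // that exposes Face.ConfidenceRegions.
    [SerializeField] private XRFaceTrackingManager _faceManager;

    // Debug labels assigned in the Inspector.
    [SerializeField] private Text DebugTextTopCenter;
    [SerializeField] private Text DebugTextConfidenceLeft;
    [SerializeField] private Text DebugTextConfidenceRight;
    [SerializeField] private Text DebugTextConfidenceLower;

    void Update()
    {
        // No value yet means the XrInstance hasn't been created.
        if (!XRFaceTrackingFeature.IsFaceTrackingExtensionEnabled.HasValue)
        {
            DebugTextTopCenter.text = "XrInstance hasn't been initialized.";
            return;
        }
        if (!XRFaceTrackingFeature.IsFaceTrackingExtensionEnabled.Value)
        {
            DebugTextTopCenter.text = "XR_ANDROID_face_tracking is not enabled.";
            return;
        }

        var regions = _faceManager.Face.ConfidenceRegions;
        for (int x = 0; x < regions.Length; x++)
        {
            // Each value ranges from 0 (no confidence) to 1 (highest confidence).
            float confidence = regions[x];
            Text regionText = null;
            switch (x)
            {
                case (int)XRFaceConfidenceRegion.Lower:
                    regionText = DebugTextConfidenceLower;
                    break;
                case (int)XRFaceConfidenceRegion.LeftUpper:
                    regionText = DebugTextConfidenceLeft;
                    break;
                case (int)XRFaceConfidenceRegion.RightUpper:
                    regionText = DebugTextConfidenceRight;
                    break;
            }

            if (regionText != null)
                regionText.text = $"{(XRFaceConfidenceRegion)x}: {confidence:F2}";
        }
    }
}
```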

For more information, see the Android XR Extensions for Unity documentation.
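The idea of gradually disabling blend shapes can be made concrete with a small, engine-independent helper. This is a hypothetical sketch, not an Android XR Extensions API: it fades a blend-shape weight to zero as a region's confidence drops, and the 0.3 and 0.7 thresholds are assumptions to tune.

```csharp
using System;

// Hypothetical helper, not part of Android XR Extensions for Unity.
public static class ConfidenceFade
{
    // Scales a blend-shape weight by the confidence of its face region:
    // below minConfidence the weight becomes 0 (blend shape disabled),
    // at or above fullConfidence it is returned unchanged,
    // and in between it is scaled linearly.
    public static float Apply(float weight, float confidence,
                              float minConfidence = 0.3f, float fullConfidence = 0.7f)
    {
        if (fullConfidence <= minConfidence)
            throw new ArgumentException("fullConfidence must be greater than minConfidence.");

        float t = (confidence - minConfidence) / (fullConfidence - minConfidence);
        t = Math.Max(0f, Math.Min(1f, t)); // clamp to [0, 1]
        return weight * t;
    }
}
```

In a Unity script you might pass the result to `SkinnedMeshRenderer.SetBlendShapeWeight` for each blend shape of your avatar that belongs to a given region; how blend shapes map to the three regions depends on your avatar.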

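Choose a hand rendering approach

Android XR supports two ways to render hands: the hand mesh and the prefab visualizer.

Hand mesh

The Android XR Unity package includes a Hand Mesh feature that gives you access to the XR_ANDROID_hand_mesh extension. The Hand Mesh feature provides a mesh of the user's hands, containing the vertices of the triangles that represent the hand's geometry. It is intended to provide a personalized mesh that visually represents the true geometry of the user's hands.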

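XR Hands prefab

The XR Hands package includes a sample called Hands Visualizer, which contains fully rigged left and right hands for rendering a contextually appropriate representation of the user's hands.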

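System gesture

Android XR includes a system gesture that opens a menu from which users can go back, open the launcher, or see an overview of running apps. Users activate this system menu with a pinch gesture of their primary hand.

While the user is interacting with the system navigation menu, the app responds only to head tracking events. The XR Hands package can detect when the user performs specific actions, such as interacting with this system navigation menu. Check AimFlags, SystemGesture, and DominantHand to know when this system action is being performed. For more information about AimFlags, see Unity's Enum MetaAimFlags documentation.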
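The check described above might be sketched as follows with the XR Hands package's `MetaAimHand` device, which is available when the Meta Hand Tracking Aim feature is enabled. Assumptions: `MetaAimHand.left` and `MetaAimHand.right` return the current devices (or null), and `aimFlags` is an integer control carrying `MetaAimFlags` bits; check the XR Hands API reference for your package version. The class name is hypothetical.

```csharp
using UnityEngine;
using UnityEngine.XR.Hands;

public class SystemGestureMonitor : MonoBehaviour
{
    void Update()
    {
        // Devices are null until the Meta Hand Tracking Aim feature creates them.
        Check(MetaAimHand.left, "Left");
        Check(MetaAimHand.right, "Right");
    }

    static void Check(MetaAimHand hand, string label)
    {
        if (hand == null)
            return;

        // Assumption: aimFlags holds MetaAimFlags bits as an integer.
        var flags = (MetaAimFlags)hand.aimFlags.ReadValue();
        bool systemGesture = (flags & MetaAimFlags.SystemGesture) != 0;
        bool dominant = (flags & MetaAimFlags.DominantHand) != 0;

        // While the system gesture is active, ignore this hand's input in your app.
        if (systemGesture)
            Debug.Log($"{label} hand ({(dominant ? "dominant" : "non-dominant")}) is performing the system gesture.");
    }
}
```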

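XR Interaction Toolkit

The XR Interaction Toolkit is a high-level, component-based interaction system for creating VR and AR experiences. It provides a framework that makes 3D and UI interactions available from Unity input events, and it supports interaction tasks such as haptic feedback, visual feedback, and locomotion.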
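As a small illustration of the toolkit's event-driven model, the following sketch tints an object while it is grabbed. It assumes XR Interaction Toolkit 3.x namespaces (`XRGrabInteractable` is in `UnityEngine.XR.Interaction.Toolkit.Interactables` there; in 2.x it is in `UnityEngine.XR.Interaction.Toolkit`). The class name and colors are hypothetical.

```csharp
using UnityEngine;
using UnityEngine.XR.Interaction.Toolkit;
using UnityEngine.XR.Interaction.Toolkit.Interactables;

[RequireComponent(typeof(XRGrabInteractable))]
public class GrabColorFeedback : MonoBehaviour
{
    [SerializeField] Color grabbedColor = Color.yellow;

    XRGrabInteractable _interactable;
    Renderer _renderer;
    Color _originalColor;

    void Awake()
    {
        _interactable = GetComponent<XRGrabInteractable>();
        _renderer = GetComponent<Renderer>();
        if (_renderer != null)
            _originalColor = _renderer.material.color;
    }

    void OnEnable()
    {
        _interactable.selectEntered.AddListener(OnGrabbed);
        _interactable.selectExited.AddListener(OnReleased);
    }

    void OnDisable()
    {
        _interactable.selectEntered.RemoveListener(OnGrabbed);
        _interactable.selectExited.RemoveListener(OnReleased);
    }

    // Visual feedback while an interactor (hand or controller) holds the object.
    void OnGrabbed(SelectEnterEventArgs args)
    {
        if (_renderer != null)
            _renderer.material.color = grabbedColor;
        Debug.Log($"Grabbed by {args.interactorObject.transform.name}");
    }

    void OnReleased(SelectExitEventArgs args)
    {
        if (_renderer != null)
            _renderer.material.color = _originalColor;
    }
}
```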

OpenXR™ and the OpenXR logo are trademarks owned by The Khronos Group Inc. and are registered as trademarks in China, the European Union, Japan, and the United Kingdom.