Android and ChromeOS provide a variety of APIs to help you build apps that offer users an exceptional stylus experience. The MotionEvent class exposes information about stylus interaction with the screen, including stylus pressure, orientation, tilt, hover, and palm detection. Low-latency graphics and motion prediction libraries enhance on-screen stylus rendering to provide a natural, pen-and-paper-like experience.

MotionEvent

The MotionEvent class represents user input interactions such as the position and movement of touch pointers on the screen. For stylus input, MotionEvent also exposes pressure, orientation, tilt, and hover data.

Event data

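To access MotionEvent data, implement a View.OnTouchListener and attach it to your view with setOnTouchListener():

Kotlin

val onTouchListener = View.OnTouchListener { v, event ->
    // Process motion event.
    true // Consume the event to keep receiving the rest of the motion set.
}
view.setOnTouchListener(onTouchListener)

Java

View.OnTouchListener listener = (v, event) -> {
    // Process motion event.
    return true; // Consume the event to keep receiving the rest of the motion set.
};
view.setOnTouchListener(listener);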

The listener receives MotionEvent objects from the system, so your app can process them.

A MotionEvent object provides data related to the following aspects of a UI event:

- Actions: Physical interaction with the device, such as touching the screen, moving a pointer over the screen surface, or hovering a pointer over the screen surface

- Pointers: Identifiers of objects interacting with the screen, such as a finger, stylus, or mouse

- Axes: Types of data, such as x and y coordinates, pressure, tilt, orientation, and hover (distance)

Actions

To implement stylus support, you need to understand what action the user is performing.

MotionEvent provides a wide variety of ACTION constants that define motion events. The most important actions for stylus input include the following:

| Action | Description |
|---|---|
| ACTION_DOWN, ACTION_POINTER_DOWN | Pointer has made contact with the screen. |
| ACTION_MOVE | Pointer is moving on the screen. |
| ACTION_UP, ACTION_POINTER_UP | Pointer is no longer in contact with the screen. |
| ACTION_CANCEL | The previous or current motion set should be canceled. |

Your app can perform tasks like starting a new stroke when ACTION_DOWN happens, drawing the stroke with ACTION_MOVE, and finishing the stroke when ACTION_UP is triggered.

The set of MotionEvent actions from ACTION_DOWN to ACTION_UP for a given pointer is called a motion set.
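The stroke lifecycle described above maps onto a small state machine. The following is a minimal plain-Java sketch that does not depend on the Android SDK: the `Action` enum is a hypothetical stand-in for the MotionEvent ACTION_DOWN, ACTION_MOVE, ACTION_UP, and ACTION_CANCEL constants, and `StrokeTracker` is a hypothetical helper you would call from your touch listener.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical mirror of the MotionEvent actions used for stroke handling.
enum Action { DOWN, MOVE, UP, CANCEL }

// Hypothetical per-motion-set stroke lifecycle (not an Android API).
class StrokeTracker {
    private final List<List<float[]>> finishedStrokes = new ArrayList<>();
    private List<float[]> currentStroke = null;

    void onEvent(Action action, float x, float y) {
        switch (action) {
            case DOWN:
                // Start of a motion set: begin a new stroke.
                currentStroke = new ArrayList<>();
                currentStroke.add(new float[] {x, y});
                break;
            case MOVE:
                // Extend the stroke while the pointer moves.
                if (currentStroke != null) {
                    currentStroke.add(new float[] {x, y});
                }
                break;
            case UP:
                // End of the motion set: keep the finished stroke.
                if (currentStroke != null) {
                    currentStroke.add(new float[] {x, y});
                    finishedStrokes.add(currentStroke);
                    currentStroke = null;
                }
                break;
            case CANCEL:
                // Discard the whole motion set without keeping it.
                currentStroke = null;
                break;
        }
    }

    int finishedStrokeCount() { return finishedStrokes.size(); }

    int pointCount(int strokeIndex) { return finishedStrokes.get(strokeIndex).size(); }

    boolean isDrawing() { return currentStroke != null; }
}
```

Keeping the lifecycle in one place makes it straightforward to discard a motion set when ACTION_CANCEL arrives.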

Pointers

Most screens are multi-touch: the system assigns a pointer to each finger, stylus, mouse, or other pointing object interacting with the screen. A pointer index enables you to get axis information for a specific pointer, such as the position of the first or the second finger touching the screen.

Pointer indexes range from zero to the number of pointers returned by MotionEvent#getPointerCount() minus 1.

Axis values of the pointers can be accessed with the getAxisValue(axis, pointerIndex) method. When the pointer index is omitted, the system returns the value for the first pointer, pointer zero (0).

MotionEvent objects contain information about the type of pointer in use. You can get the pointer type by iterating through the pointer indexes and calling the getToolType(pointerIndex) method.

To learn more about pointers, see Handle multi-touch gestures.
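To make the indexing rules concrete, the following plain-Java sketch models a multi-pointer event. `PointerSnapshot` is hypothetical, not an Android class, and tool types are plain ints here; it only mirrors how MotionEvent exposes per-pointer values by index and reads pointer 0 when the index is omitted.

```java
// Hypothetical stand-in for a multi-pointer MotionEvent (not an Android class).
final class PointerSnapshot {
    private final float[] xs;
    private final int[] toolTypes;

    PointerSnapshot(float[] xs, int[] toolTypes) {
        if (xs.length != toolTypes.length) {
            throw new IllegalArgumentException("One x value and one tool type per pointer");
        }
        this.xs = xs.clone();
        this.toolTypes = toolTypes.clone();
    }

    // Mirrors getPointerCount(): valid indexes are 0..getPointerCount() - 1.
    int getPointerCount() { return xs.length; }

    // Mirrors getX(pointerIndex).
    float getX(int pointerIndex) { return xs[pointerIndex]; }

    // Mirrors getX(): with no index, the first pointer (index 0) is used.
    float getX() { return getX(0); }

    // Mirrors getToolType(pointerIndex).
    int getToolType(int pointerIndex) { return toolTypes[pointerIndex]; }

    // Iterates over every pointer index and counts pointers of one tool type.
    int countToolType(int toolType) {
        int count = 0;
        for (int i = 0; i < getPointerCount(); i++) {
            if (getToolType(i) == toolType) {
                count++;
            }
        }
        return count;
    }
}
```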

Stylus inputs

You can filter for stylus inputs with TOOL_TYPE_STYLUS:

Kotlin

val isStylus = TOOL_TYPE_STYLUS == event.getToolType(pointerIndex)

Java

boolean isStylus = TOOL_TYPE_STYLUS == event.getToolType(pointerIndex);

The stylus can also report that it is used as an eraser with TOOL_TYPE_ERASER:

Kotlin

val isEraser = TOOL_TYPE_ERASER == event.getToolType(pointerIndex)

Java

boolean isEraser = TOOL_TYPE_ERASER == event.getToolType(pointerIndex);
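In practice, the tool type often decides what a pointer does on the canvas. The following sketch shows one possible routing policy; `ToolRouter` and its policy are assumptions, not a library API, and the constants are local copies assumed to match MotionEvent.TOOL_TYPE_FINGER, TOOL_TYPE_STYLUS, and TOOL_TYPE_ERASER. In an app, reference the MotionEvent constants directly.

```java
// Hypothetical tool-routing policy for a drawing app (not an Android API).
final class ToolRouter {
    // Local copies for this sketch; in an app, use MotionEvent.TOOL_TYPE_* directly.
    static final int TOOL_TYPE_FINGER = 1;
    static final int TOOL_TYPE_STYLUS = 2;
    static final int TOOL_TYPE_ERASER = 4;

    enum Tool { DRAW, ERASE, IGNORE }

    // Maps a pointer's tool type to what the canvas should do with it.
    static Tool route(int toolType) {
        switch (toolType) {
            case TOOL_TYPE_STYLUS:
                return Tool.DRAW;
            case TOOL_TYPE_ERASER:
                return Tool.ERASE;
            case TOOL_TYPE_FINGER:
                // Example policy: fingers don't draw, so a resting hand leaves no marks.
                return Tool.IGNORE;
            default:
                return Tool.IGNORE;
        }
    }
}
```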

Stylus axis data

ACTION_DOWN and ACTION_MOVE provide axis data about the stylus, namely x and y coordinates, pressure, orientation, tilt, and hover.

To enable access to this data, the MotionEvent API provides getAxisValue(int), where the parameter is any of the following axis identifiers:

| Axis | Return value of getAxisValue() |
|---|---|
| AXIS_X | X coordinate of a motion event. |
| AXIS_Y | Y coordinate of a motion event. |
| AXIS_PRESSURE | For a touchscreen or touchpad, the pressure applied by a finger, stylus, or other pointer. For a mouse or trackball, 1 if the primary button is pressed, 0 otherwise. |
| AXIS_ORIENTATION | For a touchscreen or touchpad, the orientation of a finger, stylus, or other pointer relative to the vertical plane of the device. |
| AXIS_TILT | The tilt angle of the stylus in radians. |
| AXIS_DISTANCE | The distance of the stylus from the screen. |

For example, MotionEvent.getAxisValue(AXIS_X) returns the x coordinate for the first pointer.

See also Handle multi-touch gestures.

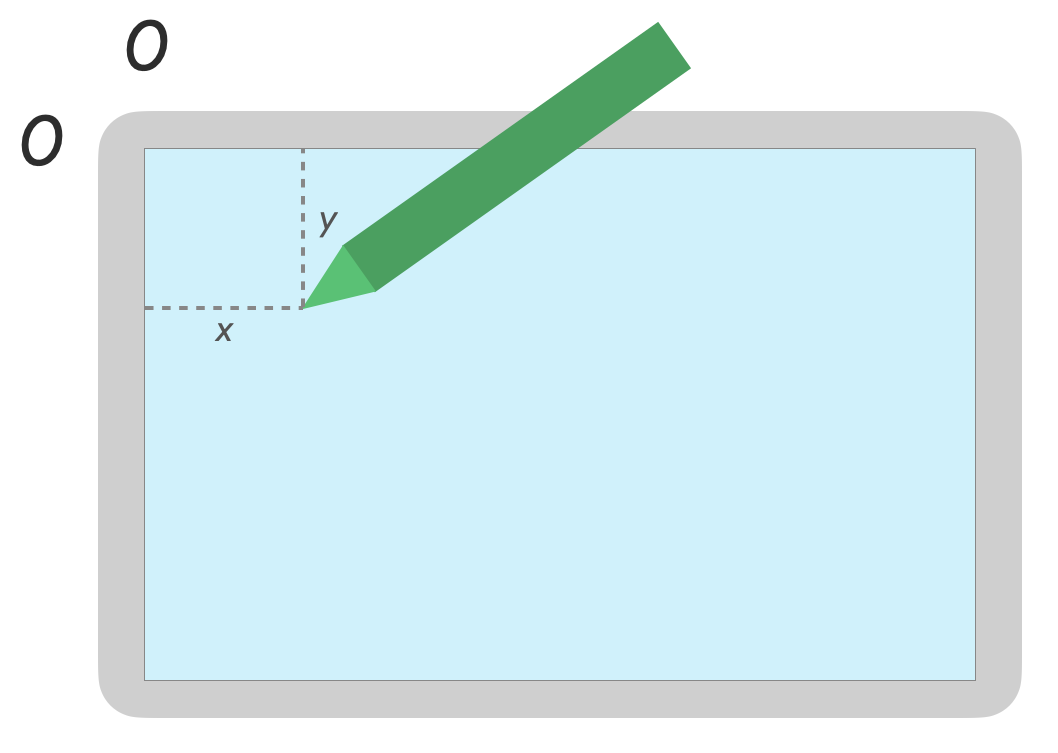

Position

You can retrieve the x and y coordinates of a pointer with the following calls:

- MotionEvent#getAxisValue(AXIS_X) or MotionEvent#getX()

- MotionEvent#getAxisValue(AXIS_Y) or MotionEvent#getY()

Pressure

You can retrieve the pointer pressure with MotionEvent#getAxisValue(AXIS_PRESSURE) or, for the first pointer, MotionEvent#getPressure().

The pressure value for touchscreens or touchpads is a value between 0 (no pressure) and 1, but higher values can be returned depending on the screen calibration.
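Because readings above 1 are possible, clamping the pressure before mapping it to a visual property keeps strokes from growing unexpectedly wide. A minimal sketch, assuming a linear response and app-chosen minimum and maximum widths (`PressureMapper` is hypothetical):

```java
// Hypothetical app-side helper: maps a raw pressure reading to a stroke width.
final class PressureMapper {
    private final float minWidth;
    private final float maxWidth;

    PressureMapper(float minWidth, float maxWidth) {
        this.minWidth = minWidth;
        this.maxWidth = maxWidth;
    }

    float widthFor(float pressure) {
        // Pressure is nominally 0..1 but can exceed 1 depending on calibration, so clamp it.
        float p = Math.max(0f, Math.min(1f, pressure));
        // Linear interpolation between the thinnest and the thickest stroke.
        return minWidth + (maxWidth - minWidth) * p;
    }
}
```

A linear curve is the simplest choice; an app may substitute a nonlinear curve if light pressure should feel more responsive.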

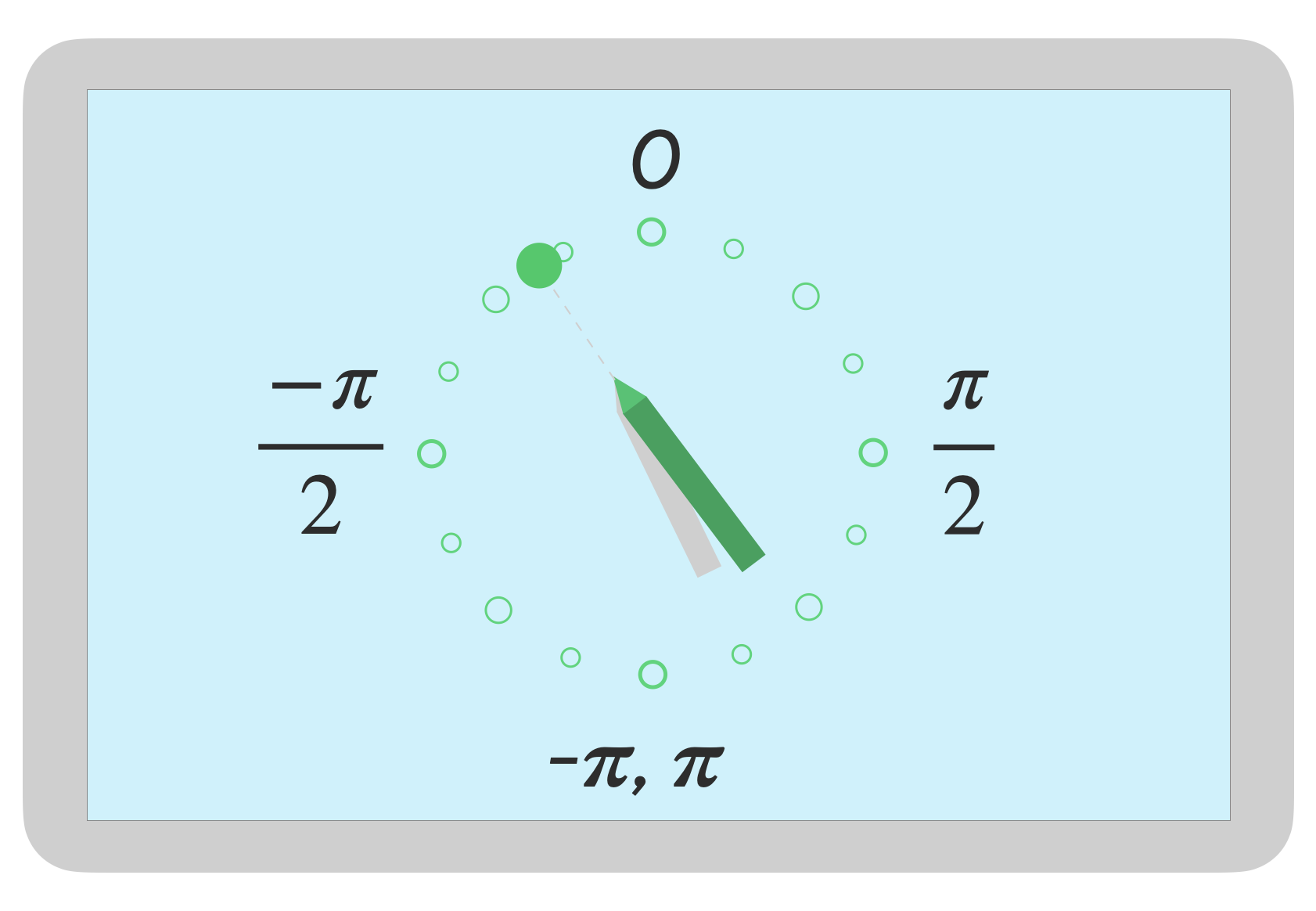

Orientation

Orientation indicates which direction the stylus is pointing.

Pointer orientation can be retrieved using getAxisValue(AXIS_ORIENTATION) or getOrientation() (for the first pointer).

For a stylus, the orientation is returned as a radian value between 0 and π clockwise, or between 0 and -π counterclockwise.

Orientation enables you to implement a real-life brush. For example, if the stylus represents a flat brush, the width of the flat brush depends on the stylus orientation.
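As an illustration of the flat-brush idea, the following sketch computes the ends of a flat nib centered on the pointer and the width of the mark it leaves along a stroke direction. It assumes the MotionEvent reference convention for stylus orientation (0 pointing toward the top of the screen, π/2 pointing right) and screen coordinates where y grows downward. `FlatBrush` and the choice to hold the nib edge perpendicular to the stylus direction are hypothetical modeling decisions.

```java
// Hypothetical flat-brush model driven by stylus orientation (radians, -π..π).
final class FlatBrush {
    private final float nibWidth;

    FlatBrush(float nibWidth) { this.nibWidth = nibWidth; }

    // Returns {x1, y1, x2, y2}: the ends of the nib edge, centered on (cx, cy).
    // The stylus points along (sin θ, -cos θ); the edge is modeled perpendicular
    // to that, along (cos θ, sin θ), in screen coordinates where y grows downward.
    float[] nibEnds(float cx, float cy, double orientation) {
        double half = nibWidth / 2.0;
        double ex = Math.cos(orientation);
        double ey = Math.sin(orientation);
        return new float[] {
            (float) (cx - half * ex), (float) (cy - half * ey),
            (float) (cx + half * ex), (float) (cy + half * ey)
        };
    }

    // Width of the mark left when the brush moves along (dx, dy): the nib edge
    // projected perpendicular to the stroke direction (a 2D cross product).
    float markWidth(double orientation, double dx, double dy) {
        double len = Math.hypot(dx, dy);
        if (len == 0) {
            return 0f;
        }
        double cross = Math.cos(orientation) * (dy / len) - Math.sin(orientation) * (dx / len);
        return (float) (nibWidth * Math.abs(cross));
    }
}
```

With the stylus pointing up, a vertical stroke gets the full nib width while a horizontal stroke becomes a hairline, which is the calligraphic effect a flat brush is meant to produce.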

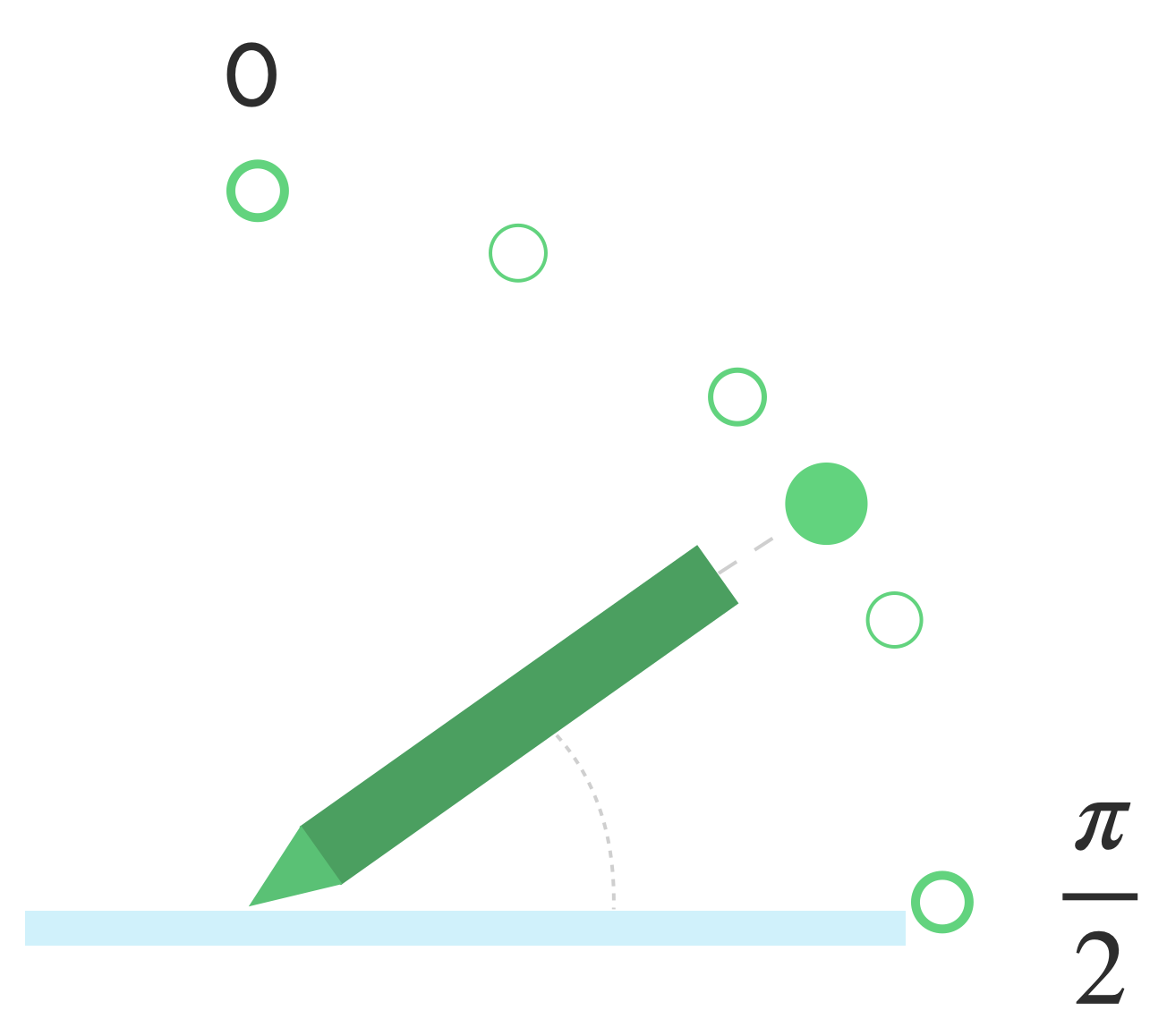

Tilt

Tilt measures the inclination of the stylus relative to the screen.

Tilt returns the positive angle of the stylus in radians, where zero is perpendicular to the screen and π/2 is flat on the surface.

The tilt angle can be retrieved using getAxisValue(AXIS_TILT); there is no shortcut method for the first pointer.

Tilt can be used to reproduce real-life tools as closely as possible, like mimicking shading with a tilted pencil.
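One way to mimic pencil shading is to widen and lighten the mark as the stylus leans over. The following sketch assumes a linear response over the 0 to π/2 tilt range; `TiltShading` and its maxWidthScale and minAlpha parameters are hypothetical tuning choices.

```java
// Hypothetical tilt-to-shading mapping; tilt is in radians, 0 (upright) to π/2 (flat).
final class TiltShading {
    private final float baseWidth;
    private final float maxWidthScale; // width multiplier when the stylus lies flat
    private final float minAlpha;      // opacity when the stylus lies flat

    TiltShading(float baseWidth, float maxWidthScale, float minAlpha) {
        this.baseWidth = baseWidth;
        this.maxWidthScale = maxWidthScale;
        this.minAlpha = minAlpha;
    }

    // 0.0 when upright, 1.0 when flat on the surface; out-of-range input is clamped.
    static float tiltFraction(double tilt) {
        double clamped = Math.max(0.0, Math.min(Math.PI / 2, tilt));
        return (float) (clamped / (Math.PI / 2));
    }

    // A tilted pencil lays down a broader mark...
    float width(double tilt) {
        return baseWidth * (1f + (maxWidthScale - 1f) * tiltFraction(tilt));
    }

    // ...and a lighter one, like shading with the side of the lead.
    float alpha(double tilt) {
        return 1f - (1f - minAlpha) * tiltFraction(tilt);
    }
}
```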

Hover

The distance of the stylus from the screen can be obtained with getAxisValue(AXIS_DISTANCE). The method returns a value from 0.0 (contact with the screen) to higher values as the stylus moves away from the screen. The hover distance between the screen and the nib (point) of the stylus depends on the manufacturer of both the screen and the stylus. Because implementations can vary, don't rely on precise values for app-critical functionality.

Stylus hover can be used to preview the size of the brush or indicate that a button is going to be selected.
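Because hover distances vary by device, a preview that degrades gracefully is safer than one that depends on exact values. This sketch fades a brush-size preview as the stylus rises; `HoverPreview` and the app-chosen fadeDistance are assumptions, and the input distance would come from getAxisValue(AXIS_DISTANCE).

```java
// Hypothetical brush-preview helper driven by stylus hover distance.
final class HoverPreview {
    private final float fadeDistance;

    // fadeDistance: an app-chosen distance at which the preview disappears.
    // Tune it per device, because hover ranges differ between screens and styluses.
    HoverPreview(float fadeDistance) {
        if (fadeDistance <= 0f) {
            throw new IllegalArgumentException("fadeDistance must be positive");
        }
        this.fadeDistance = fadeDistance;
    }

    // 1.0 (fully visible) at contact, fading linearly to 0.0 at fadeDistance and beyond.
    float previewAlpha(float distance) {
        float d = Math.max(0f, distance);
        return Math.max(0f, 1f - d / fadeDistance);
    }

    // Radius of the preview circle: the brush radius while the preview is visible, else 0.
    float previewRadius(float distance, float brushRadius) {
        return previewAlpha(distance) > 0f ? brushRadius : 0f;
    }
}
```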

Note: Compose provides modifiers that affect the interactive state of UI elements:

- hoverable: Configure component to be hoverable using pointer enter and exit events.

- indication: Draws visual effects for this component when interactions occur.

Palm rejection, navigation, and unwanted inputs

Sometimes multi-touch screens can register unwanted touches, for example, when a user naturally rests their hand on the screen for support while handwriting. Palm rejection is a mechanism that detects this behavior and notifies you that the last MotionEvent set should be canceled.

As a result, you must keep a history of user inputs so that the unwanted touches can be removed from the screen and the legitimate user inputs can be re-rendered.

ACTION_CANCEL and FLAG_CANCELED

ACTION_CANCEL and FLAG_CANCELED are both designed to inform you that the previous MotionEvent set, starting from the last ACTION_DOWN, should be canceled, so you can, for example, undo the last stroke for a given pointer in a drawing app.

ACTION_CANCEL

Added in Android 1.0 (API level 1)

ACTION_CANCEL indicates the previous set of motion events should be canceled.

ACTION_CANCEL is triggered when any of the following is detected:

- Navigation gestures

- Palm rejection

When ACTION_CANCEL is triggered, you should identify the active pointer with getPointerId(getActionIndex()). Then remove the stroke created with that pointer from the input history, and re-render the scene.
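A minimal sketch of that bookkeeping, assuming strokes are stored per pointer ID: `StrokeHistory` is hypothetical and uses plain Java collections. In an app, cancelStroke() would receive getPointerId(getActionIndex()) when ACTION_CANCEL arrives, and the scene would be redrawn from strokesToRender().

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical input history keyed by pointer ID (not an Android API).
final class StrokeHistory {
    // Strokes still in progress, one per pointer ID.
    private final Map<Integer, List<float[]>> active = new HashMap<>();
    // Completed strokes, in drawing order.
    private final List<List<float[]>> committed = new ArrayList<>();

    void down(int pointerId, float x, float y) {
        List<float[]> stroke = new ArrayList<>();
        stroke.add(new float[] {x, y});
        active.put(pointerId, stroke);
    }

    void move(int pointerId, float x, float y) {
        List<float[]> stroke = active.get(pointerId);
        if (stroke != null) {
            stroke.add(new float[] {x, y});
        }
    }

    void up(int pointerId) {
        List<float[]> stroke = active.remove(pointerId);
        if (stroke != null) {
            committed.add(stroke);
        }
    }

    // ACTION_CANCEL: drop the stroke created by this pointer so it is never rendered.
    void cancelStroke(int pointerId) {
        active.remove(pointerId);
    }

    // Everything that should be on screen after re-rendering the scene.
    List<List<float[]>> strokesToRender() {
        List<List<float[]>> all = new ArrayList<>(committed);
        all.addAll(active.values());
        return all;
    }
}
```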

FLAG_CANCELED

Added in Android 13 (API level 33)

FLAG_CANCELED indicates that the pointer going up was an unintentional user touch. The flag is typically set when the user accidentally touches the screen, such as by gripping the device or placing the palm of the hand on the screen.

You access the flag value as follows:

Kotlin

val cancel = (event.flags and FLAG_CANCELED) == FLAG_CANCELED

Java

boolean cancel = (event.getFlags() & FLAG_CANCELED) == FLAG_CANCELED;

If the flag is set, you need to undo the last MotionEvent set, starting from the last ACTION_DOWN from this pointer.

Like ACTION_CANCEL, the pointer can be found with getPointerId(actionIndex).

Figure: MotionEvent sets. Palm touch is canceled, and display is re-rendered.
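Combining the flag check with per-pointer stroke bookkeeping, a pointer-up handler either keeps or discards the stroke. In this plain-Java sketch, `CanceledFlagHandler` is hypothetical and FLAG_CANCELED is a local copy with an illustrative value; in an app, use MotionEvent.FLAG_CANCELED (Android 13, API level 33, and higher) and read the flags with getFlags().

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical pointer-up handling that honors a "canceled" flag bit.
final class CanceledFlagHandler {
    // Illustrative local copy; in an app, use MotionEvent.FLAG_CANCELED.
    static final int FLAG_CANCELED = 0x20;

    private final Map<Integer, List<float[]>> activeStrokes = new HashMap<>();
    private final List<List<float[]>> committedStrokes = new ArrayList<>();

    // Same bitmask test as the Kotlin and Java snippets above.
    static boolean isCanceled(int flags) {
        return (flags & FLAG_CANCELED) == FLAG_CANCELED;
    }

    void onPointerDown(int pointerId, float x, float y) {
        List<float[]> stroke = new ArrayList<>();
        stroke.add(new float[] {x, y});
        activeStrokes.put(pointerId, stroke);
    }

    // Called for ACTION_UP or ACTION_POINTER_UP with the event's flags.
    // Returns true if the stroke was kept.
    boolean onPointerUp(int pointerId, int flags) {
        List<float[]> stroke = activeStrokes.remove(pointerId);
        if (stroke == null) {
            return false;
        }
        if (isCanceled(flags)) {
            // Unintentional touch: undo the whole motion set since this pointer's ACTION_DOWN.
            return false;
        }
        committedStrokes.add(stroke);
        return true;
    }

    int committedCount() { return committedStrokes.size(); }
}
```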

Full screen, edge-to-edge, and navigation gestures

If an app is full screen and has actionable elements near the edge, such as the canvas of a drawing or note-taking app, swiping from the bottom of the screen to display the navigation or move the app to the background might result in an unwanted touch on the canvas.

To prevent gestures from triggering unwanted touches in your app, you can take advantage of insets and ACTION_CANCEL.

See also the Palm rejection, navigation, and unwanted inputs section.

Use the setSystemBarsBehavior() method and BEHAVIOR_SHOW_TRANSIENT_BARS_BY_SWIPE of WindowInsetsController to prevent navigation gestures from causing unwanted touch events:

Kotlin

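// Get the insets controller for the current window (inside an Activity).
val windowInsetsController = WindowCompat.getInsetsController(window, window.decorView)
// Configure the behavior of the hidden system bars.
windowInsetsController.systemBarsBehavior =
    WindowInsetsControllerCompat.BEHAVIOR_SHOW_TRANSIENT_BARS_BY_SWIPE

Java

// Get the insets controller for the current window (inside an Activity).
WindowInsetsControllerCompat windowInsetsController =
        WindowCompat.getInsetsController(getWindow(), getWindow().getDecorView());
// Configure the behavior of the hidden system bars.
windowInsetsController.setSystemBarsBehavior(
        WindowInsetsControllerCompat.BEHAVIOR_SHOW_TRANSIENT_BARS_BY_SWIPE
);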

To learn more about inset and gesture management, see:

- Hide system bars for immersive mode

- Ensure compatibility with gesture navigation

- Display content edge-to-edge in your app

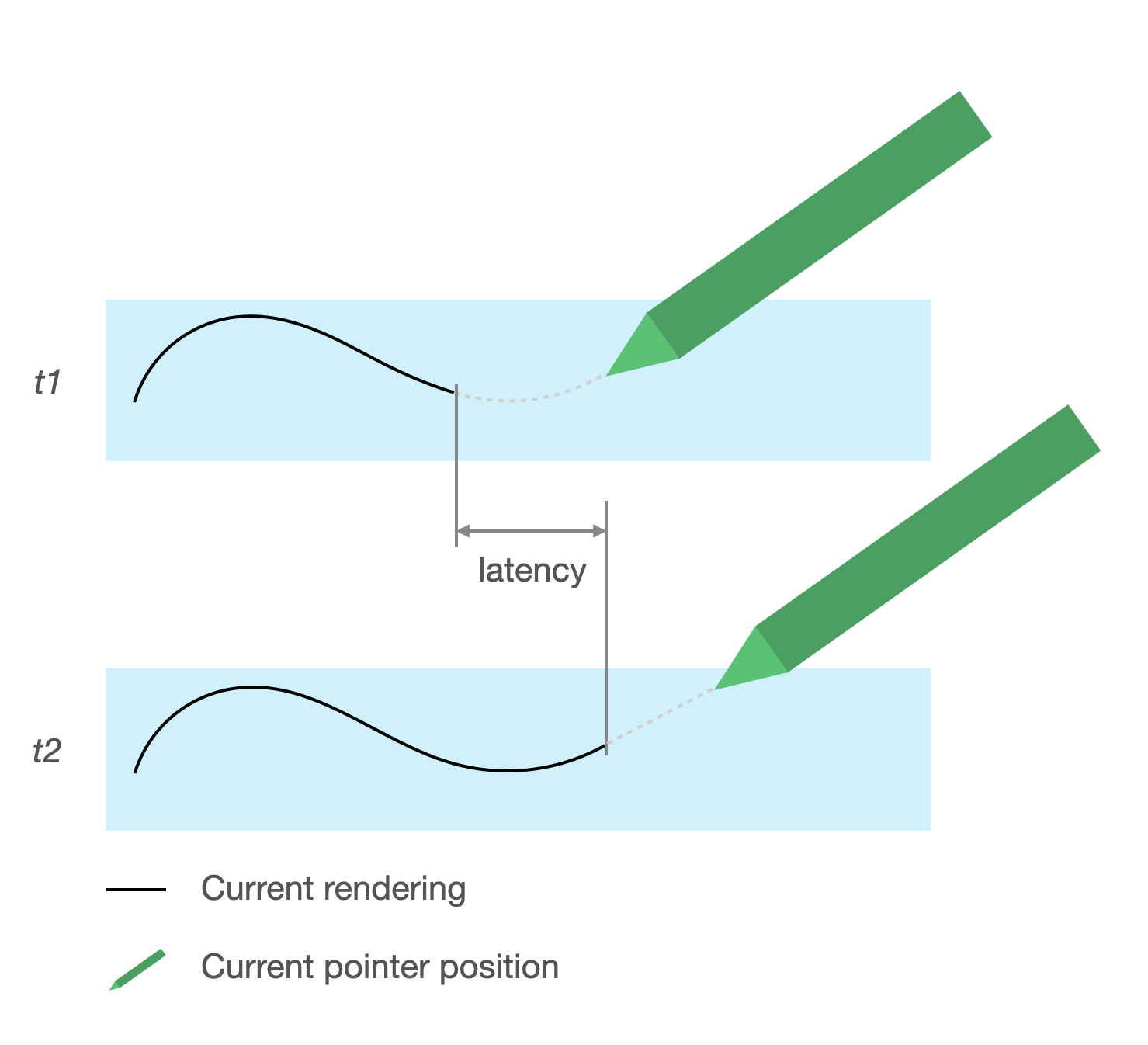

Low latency

Latency is the time required by the hardware, system, and application to process and render user input.

Latency = hardware and OS input processing + app processing + system compositing + hardware rendering

Sources of latency

- Registering stylus with touchscreen (hardware): Initial wireless connection when the stylus and OS communicate to be registered and synced.

- Touch sampling rate (hardware): The number of times per second a touchscreen checks whether a pointer is touching the surface, ranging from 60 to 1000 Hz.

- Input processing (app): Applying color, graphic effects, and transformation on user input.

- Graphic rendering (OS + hardware): Buffer swapping, hardware processing.
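The touch sampling rate translates directly into a wait: the screen reads touch input once per sampling period T = 1/f, so a touch that lands just after a sample can wait up to one full period before it is registered. For the range above, and ignoring every other latency source:

$$
T = \frac{1}{f}, \qquad
T_{60\,\mathrm{Hz}} = \frac{1}{60}\,\mathrm{s} \approx 16.7\,\mathrm{ms}, \qquad
T_{1000\,\mathrm{Hz}} = \frac{1}{1000}\,\mathrm{s} = 1\,\mathrm{ms}
$$

Raising the sampling rate shrinks only this one term of the latency sum; app processing and rendering are unaffected.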

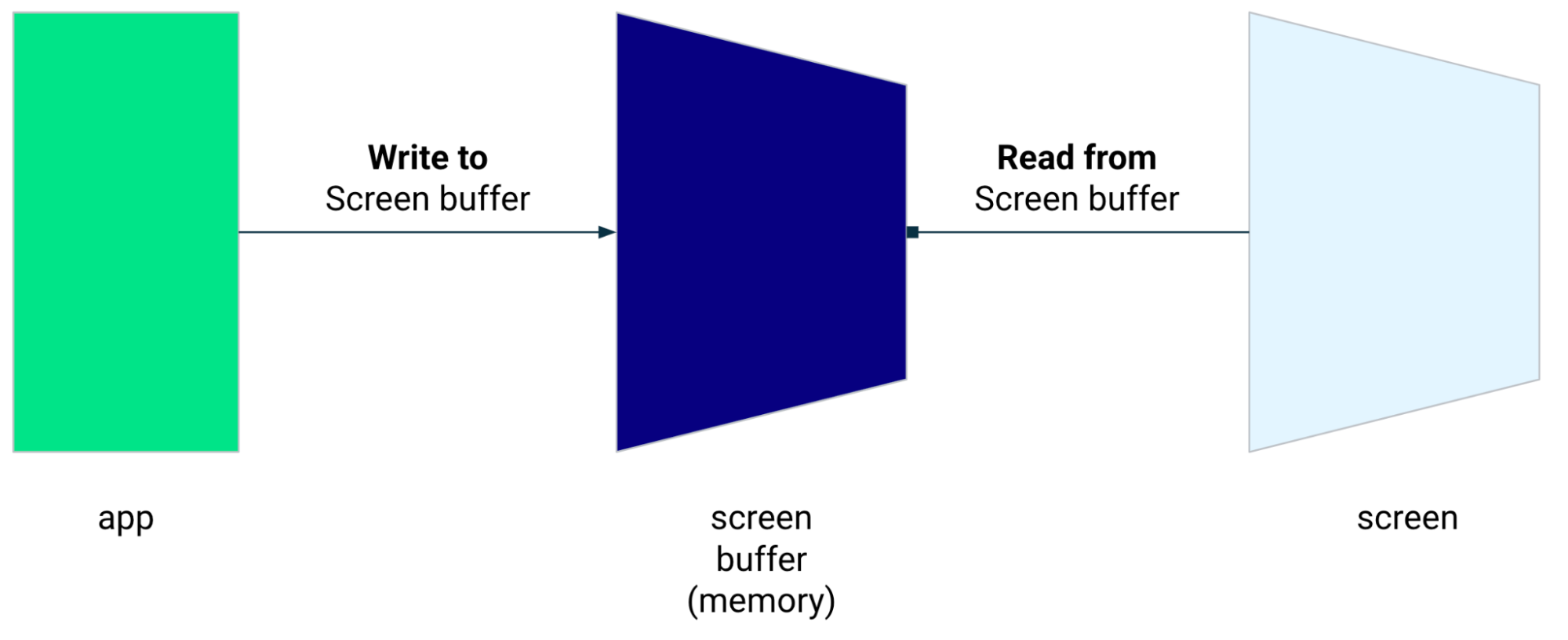

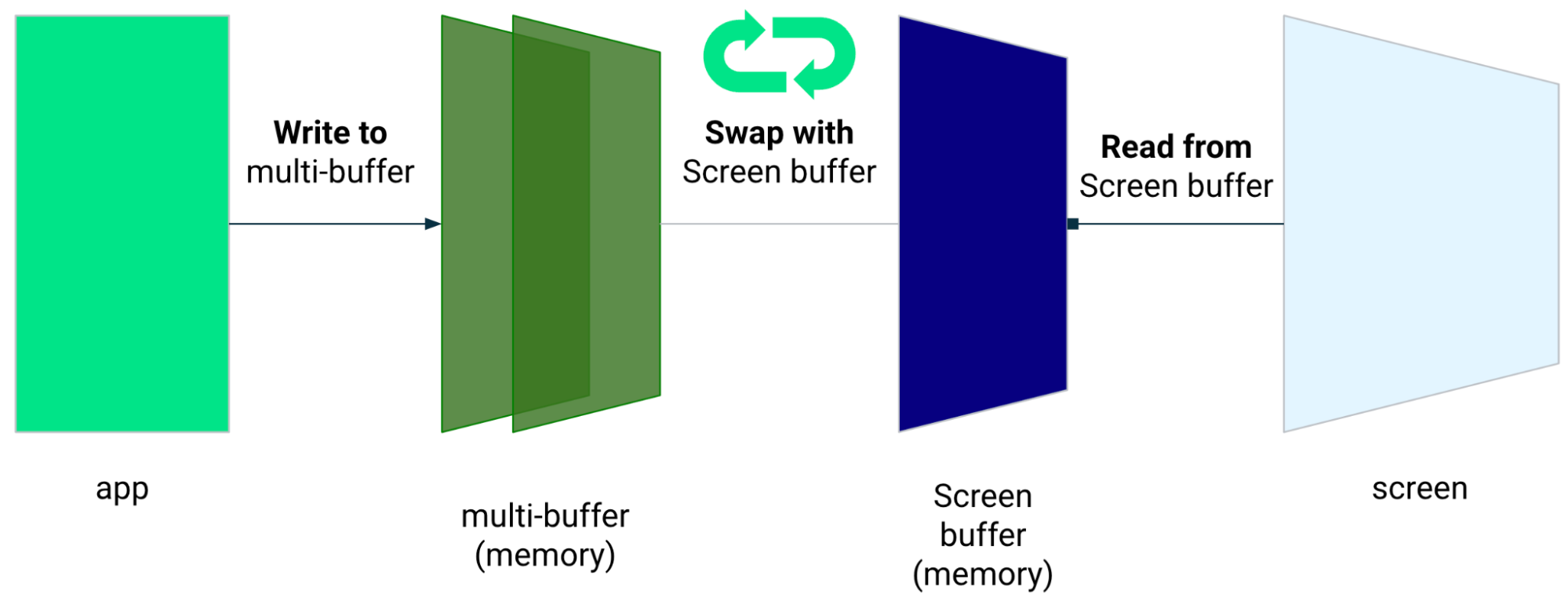

Low-latency graphics

The Jetpack low-latency graphics library reduces the processing time between user input and on-screen rendering.

The library reduces processing time by avoiding multi-buffer rendering and leveraging a front-buffer rendering technique, which means writing directly to the screen.

Front-buffer rendering

The front buffer is the memory the screen uses for rendering. It is the closest apps can get to drawing directly to the screen. The low-latency library enables apps to render directly to the front buffer. This improves performance by preventing buffer swapping, which can happen for regular multi-buffer rendering or double-buffer rendering (the most common case).

While front-buffer rendering is a great technique to render a small area of the screen, it is not designed to be used for refreshing the entire screen. With front-buffer rendering, the app is rendering content into a buffer from which the display is reading. As a result, there is the possibility of rendering artifacts or tearing (see below).

The low-latency library is available from Android 10 (API level 29) and higher and on ChromeOS devices running Android 10 (API level 29) and higher.

Dependencies

The low-latency library provides the components for front-buffer rendering implementation. The library is added as a dependency in the app's module build.gradle file:

dependencies {
    implementation "androidx.graphics:graphics-core:1.0.0-alpha03"
}

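GLFrontBufferedRenderer callbacks

The low-latency library includes the GLFrontBufferedRenderer.Callback interface, which defines the following methods:

- onDrawFrontBufferedLayer(): Short OpenGL rendering to the front buffer, affecting a small area of the screen.

- onDrawDoubleBufferedLayer(): OpenGL rendering of the full scene to the double buffer.

The low-latency library is not opinionated about the type of data you use with GLFrontBufferedRenderer. However, the library processes the data as a stream of hundreds of data points, so design your data to optimize memory usage and allocation.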
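One way to follow that advice is to store points in a primitive array rather than allocating an object per point. The following plain-Java sketch is hypothetical (`PointBuffer` is not part of the low-latency library): it keeps x, y, and pressure interleaved in a growable float[] and can be cleared and reused between strokes without reallocating.

```java
import java.util.Arrays;

// Hypothetical allocation-friendly point storage: x, y, pressure interleaved in one float[].
final class PointBuffer {
    private static final int FLOATS_PER_POINT = 3;
    private float[] data;
    private int size; // number of points stored

    PointBuffer(int initialCapacityPoints) {
        data = new float[Math.max(1, initialCapacityPoints) * FLOATS_PER_POINT];
    }

    void add(float x, float y, float pressure) {
        int needed = (size + 1) * FLOATS_PER_POINT;
        if (needed > data.length) {
            // Grow geometrically so appends stay amortized O(1).
            data = Arrays.copyOf(data, Math.max(needed, data.length * 2));
        }
        int base = size * FLOATS_PER_POINT;
        data[base] = x;
        data[base + 1] = y;
        data[base + 2] = pressure;
        size++;
    }

    int size() { return size; }

    float x(int i) { return data[i * FLOATS_PER_POINT]; }

    float y(int i) { return data[i * FLOATS_PER_POINT + 1]; }

    float pressure(int i) { return data[i * FLOATS_PER_POINT + 2]; }

    // Reuse the backing array for the next stroke instead of allocating a new buffer.
    void clear() { size = 0; }

    int capacityPoints() { return data.length / FLOATS_PER_POINT; }
}
```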

Callbacks

To enable rendering callbacks, implement GLFrontBufferedRenderer.Callback and override onDrawFrontBufferedLayer() and onDrawDoubleBufferedLayer(). GLFrontBufferedRenderer uses the callbacks to render your data in the most optimized way possible.

Kotlin

val callbacks = object : GLFrontBufferedRenderer.Callback<DATA_TYPE> {
    override fun onDrawFrontBufferedLayer(
        eglManager: EGLManager,
        bufferInfo: BufferInfo,
        transform: FloatArray,
        param: DATA_TYPE
    ) {
        // OpenGL for front buffer, short, affecting small area of the screen.
    }

    override fun onDrawDoubleBufferedLayer(
        eglManager: EGLManager,
        bufferInfo: BufferInfo,
        transform: FloatArray,
        params: Collection<DATA_TYPE>
    ) {
        // OpenGL full scene rendering.
    }
}

Java

GLFrontBufferedRenderer.Callback<DATA_TYPE> callbacks =
        new GLFrontBufferedRenderer.Callback<DATA_TYPE>() {
    @Override
    public void onDrawFrontBufferedLayer(@NonNull EGLManager eglManager,
            @NonNull BufferInfo bufferInfo,
            @NonNull float[] transform,
            DATA_TYPE param) {
        // OpenGL for front buffer, short, affecting small area of the screen.
    }

    @Override
    public void onDrawDoubleBufferedLayer(@NonNull EGLManager eglManager,
            @NonNull BufferInfo bufferInfo,
            @NonNull float[] transform,
            @NonNull Collection<? extends DATA_TYPE> params) {
        // OpenGL full scene rendering.
    }
};

Declare an instance of GLFrontBufferedRenderer

Prepare the GLFrontBufferedRenderer by providing the SurfaceView and callbacks you created earlier. GLFrontBufferedRenderer optimizes the rendering to the front and double buffer using your callbacks:

Kotlin

var glFrontBufferRenderer = GLFrontBufferedRenderer<DATA_TYPE>(surfaceView, callbacks)

Java

GLFrontBufferedRenderer<DATA_TYPE> glFrontBufferRenderer = new GLFrontBufferedRenderer<DATA_TYPE>(surfaceView, callbacks);

Rendering

Front-buffer rendering starts when you call the renderFrontBufferedLayer() method, which triggers the onDrawFrontBufferedLayer() callback.

Double-buffer rendering resumes when you call the commit() function, which triggers the onDrawDoubleBufferedLayer() callback.

In the example that follows, the process renders to the front buffer (fast rendering) when the user starts drawing on the screen (ACTION_DOWN) and moves the pointer around (ACTION_MOVE). The process renders to the double buffer when the pointer leaves the surface of the screen (ACTION_UP).

You can use requestUnbufferedDispatch() to ask that the input system doesn't batch motion events but instead delivers them as soon as they're available:

Kotlin

// dataPoint: a DATA_TYPE instance your app builds from motionEvent.
when (motionEvent.action) {
    MotionEvent.ACTION_DOWN -> {
        // Deliver input events as soon as they arrive.
        view.requestUnbufferedDispatch(motionEvent)
        // Pointer is in contact with the screen.
        glFrontBufferRenderer.renderFrontBufferedLayer(dataPoint)
    }
    MotionEvent.ACTION_MOVE -> {
        // Pointer is moving.
        glFrontBufferRenderer.renderFrontBufferedLayer(dataPoint)
    }
    MotionEvent.ACTION_UP -> {
        // Pointer is not in contact with the screen.
        glFrontBufferRenderer.commit()
    }
    MotionEvent.ACTION_CANCEL -> {
        // Cancel front buffer; remove last motion set from the screen.
        glFrontBufferRenderer.cancel()
    }
}

Java

// dataPoint: a DATA_TYPE instance your app builds from motionEvent.
switch (motionEvent.getAction()) {
    case MotionEvent.ACTION_DOWN:
        // Deliver input events as soon as they arrive.
        surfaceView.requestUnbufferedDispatch(motionEvent);
        // Pointer is in contact with the screen.
        glFrontBufferRenderer.renderFrontBufferedLayer(dataPoint);
        break;
    case MotionEvent.ACTION_MOVE:
        // Pointer is moving.
        glFrontBufferRenderer.renderFrontBufferedLayer(dataPoint);
        break;
    case MotionEvent.ACTION_UP:
        // Pointer is not in contact with the screen.
        glFrontBufferRenderer.commit();
        break;
    case MotionEvent.ACTION_CANCEL:
        // Cancel front buffer; remove last motion set from the screen.
        glFrontBufferRenderer.cancel();
        break;
}
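To reason about what ends up on screen, it can help to model the three renderer calls separately from OpenGL. The following is a hypothetical simulation, not the library's implementation: renderFrontBufferedLayer() adds one item to the front layer, commit() hands every pending item to the double-buffered scene, and cancel() discards the pending items. The real GLFrontBufferedRenderer invokes your callbacks instead of keeping lists.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical simulation of front-buffer vs. double-buffer layering (no OpenGL involved).
final class LayeredCanvasModel<T> {
    // Items drawn on the front layer since the last commit() or cancel().
    private final List<T> pending = new ArrayList<>();
    // Items handed to the double-buffered (full scene) layer.
    private final List<T> scene = new ArrayList<>();

    // Like renderFrontBufferedLayer(param): fast, incremental, small-area drawing.
    void renderFrontBufferedLayer(T param) {
        pending.add(param);
    }

    // Like commit(): all pending items are redrawn in the double-buffered scene.
    void commit() {
        scene.addAll(pending);
        pending.clear();
    }

    // Like cancel(): the front layer is discarded and nothing reaches the scene.
    void cancel() {
        pending.clear();
    }

    List<T> visibleOnFrontLayer() { return Collections.unmodifiableList(pending); }

    List<T> scene() { return Collections.unmodifiableList(scene); }
}
```

In this model, a canceled palm stroke never reaches the scene, which matches the intent of calling cancel() on ACTION_CANCEL in the example above.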

Rendering do's and don'ts

- Do: Render small portions of the screen, such as handwriting, drawing, and sketching.

- Don't: Use front-buffer rendering for fullscreen updates, panning, or zooming. These can result in tearing.

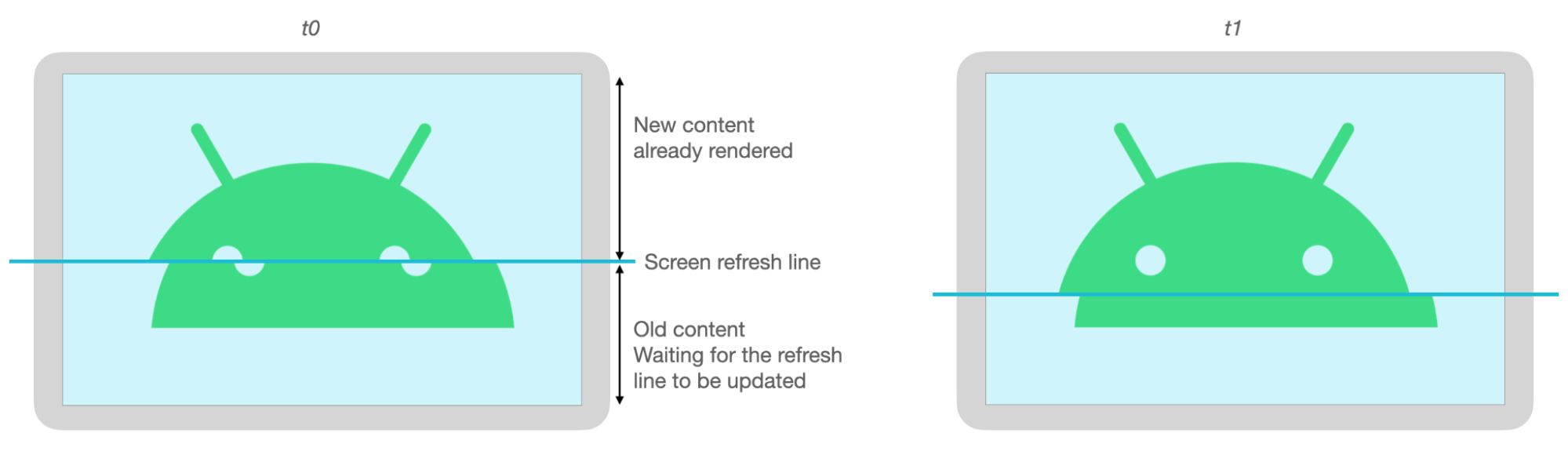

Tearing

Tearing happens when the screen refreshes while the screen buffer is being modified at the same time. A part of the screen shows new data, while another shows old data.

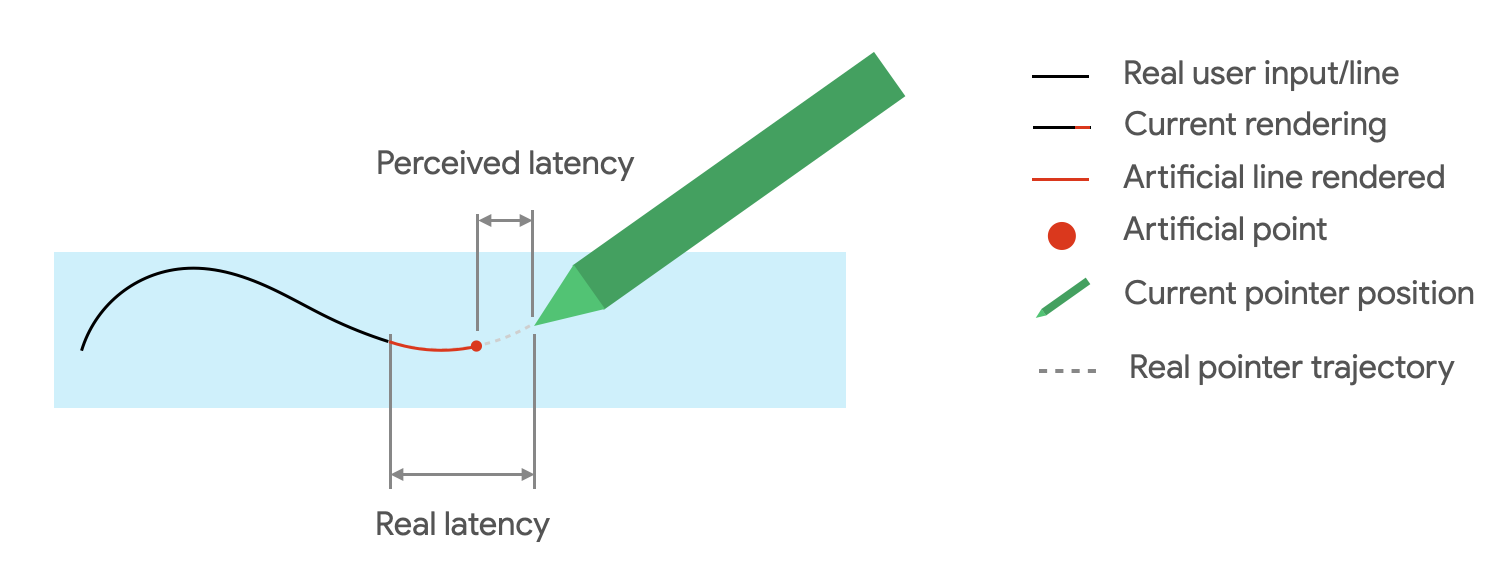

Motion prediction

The Jetpack motion prediction library reduces perceived latency by estimating the user's stroke path and providing temporary, artificial points to the renderer.

The motion prediction library gets real user inputs as MotionEvent objects. The objects contain information about x and y coordinates, pressure, and time, which the motion predictor uses to predict future MotionEvent objects.

Predicted MotionEvent objects are only estimates. Predicted events can reduce perceived latency, but predicted data must be replaced with actual MotionEvent data once it is received.
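Purely to illustrate what an estimated point is, the following sketch swaps in simple linear extrapolation from the last two real samples. `LinearPredictor` is hypothetical and is not how MotionEventPredictor works internally; it only shows how position and time can yield a future point, and why that point is a guess.

```java
// Hypothetical linear-extrapolation predictor, a stand-in for illustration only.
final class LinearPredictor {
    private float lastX, lastY, prevX, prevY;
    private long lastTimeMs, prevTimeMs;
    private int samples;

    // Record a real sample (x, y, timestamp in milliseconds).
    void record(float x, float y, long timeMs) {
        prevX = lastX;
        prevY = lastY;
        prevTimeMs = lastTimeMs;
        lastX = x;
        lastY = y;
        lastTimeMs = timeMs;
        samples++;
    }

    // Estimate the position aheadMs milliseconds after the latest real sample,
    // assuming constant velocity. Returns null until two distinct samples exist.
    float[] predict(long aheadMs) {
        if (samples < 2 || lastTimeMs == prevTimeMs) {
            return null;
        }
        float dt = lastTimeMs - prevTimeMs;
        float vx = (lastX - prevX) / dt;
        float vy = (lastY - prevY) / dt;
        return new float[] {lastX + vx * aheadMs, lastY + vy * aheadMs};
    }
}
```

Any curve or slowdown the user makes after the last sample is invisible to this estimate, which is why predicted points are drawn only temporarily.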

The motion prediction library is available from Android 4.4 (API level 19) and higher and on ChromeOS devices running Android 9 (API level 28) and higher.

Dependencies

The motion prediction library provides the implementation of prediction. The library is added as a dependency in the app's module build.gradle file:

dependencies {
    implementation "androidx.input:input-motionprediction:1.0.0-beta01"
}

Implementation

The motion prediction library includes the MotionEventPredictor interface, which defines the following methods:

- record(): Stores MotionEvent objects as a record of the user's actions

- predict(): Returns a predicted MotionEvent

Declare an instance of MotionEventPredictor

Kotlin

val motionEventPredictor = MotionEventPredictor.newInstance(view)

Java

MotionEventPredictor motionEventPredictor = MotionEventPredictor.newInstance(surfaceView);

Feed the predictor with data

Kotlin

motionEventPredictor.record(motionEvent)

Java

motionEventPredictor.record(motionEvent);

Predict

Kotlin

when (motionEvent.action) {
    MotionEvent.ACTION_MOVE -> {
        val predictedMotionEvent = motionEventPredictor.predict()
        if (predictedMotionEvent != null) {
            // Use the predicted MotionEvent to inject a new artificial point.
        }
    }
}

Java

switch (motionEvent.getAction()) {
    case MotionEvent.ACTION_MOVE: {
        MotionEvent predictedMotionEvent = motionEventPredictor.predict();
        if (predictedMotionEvent != null) {
            // Use the predicted MotionEvent to inject a new artificial point.
        }
        break;
    }
}

Motion prediction do's and don'ts

- Do: Remove prediction points when a new predicted point is added.

- Don't: Use prediction points for final rendering.
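A minimal sketch of these two rules, assuming a renderer that keeps real points plus at most one temporary predicted point: `PredictedStroke` is hypothetical. Each new prediction replaces the previous one, real input clears it, and the final stroke contains only real points.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical stroke model that keeps predicted points out of the final result.
final class PredictedStroke {
    private final List<float[]> realPoints = new ArrayList<>();
    private float[] predictedPoint; // at most one temporary point

    // Real MotionEvent data always replaces any earlier estimate.
    void addRealPoint(float x, float y) {
        realPoints.add(new float[] {x, y});
        predictedPoint = null;
    }

    // A new prediction replaces the previous one instead of accumulating.
    void setPrediction(float x, float y) {
        predictedPoint = new float[] {x, y};
    }

    // Points to draw for the current frame: real points plus the temporary estimate.
    List<float[]> pointsForFrame() {
        List<float[]> frame = new ArrayList<>(realPoints);
        if (predictedPoint != null) {
            frame.add(predictedPoint);
        }
        return frame;
    }

    // Points for final rendering (for example, on ACTION_UP): real data only.
    List<float[]> finalPoints() {
        return Collections.unmodifiableList(realPoints);
    }
}
```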

Note-taking apps

ChromeOS enables your app to declare some note-taking actions.

To register an app as a note-taking app on ChromeOS, see Input compatibility.

To register an app as a note-taking app on Android, see Create a note-taking app.

Android 14 (API level 34) introduced the ACTION_CREATE_NOTE intent, which enables your app to start a note-taking activity on the lock screen.

Digital ink recognition with ML Kit

With ML Kit digital ink recognition, your app can recognize handwritten text on a digital surface in hundreds of languages. You can also classify sketches.

ML Kit provides the Ink.Stroke.Builder class to create Ink objects that can be processed by machine learning models to convert handwriting to text.

In addition to handwriting recognition, the model is able to recognize gestures, such as delete and circle.

See Digital ink recognition to learn more.