Android and ChromeOS provide a variety of APIs to help you build apps that offer users an exceptional stylus experience. The MotionEvent class exposes information about stylus interaction with the screen, including stylus pressure, orientation, tilt, hover, and palm detection. Low-latency graphics and motion prediction libraries enhance on-screen stylus rendering to provide a natural, pen-and-paper-like experience.

MotionEvent

The MotionEvent class represents user input interactions such as the position and movement of touch pointers on the screen. For stylus input, MotionEvent also exposes pressure, orientation, tilt, and hover data.

Event data

To access MotionEvent objects, add the pointerInteropFilter modifier to a drawing surface. Implement a ViewModel class with a method that processes motion events, then pass that method as the onTouchEvent lambda of pointerInteropFilter:

    @Composable
    @OptIn(ExperimentalComposeUiApi::class)
    fun DrawArea(modifier: Modifier = Modifier) {
        Canvas(
            modifier = modifier
                .clipToBounds()
                // viewModel is assumed to be in scope; processMotionEvent()
                // must return true when it has handled the event.
                .pointerInteropFilter { viewModel.processMotionEvent(it) }
        ) {
            // Drawing code here.
        }
    }

A MotionEvent object provides data related to the following aspects of a UI event:

- Actions: Physical interaction with the device, such as touching the screen, moving a pointer over the screen surface, or hovering a pointer over the screen surface
- Pointers: Identifiers of the objects interacting with the screen, such as a finger, stylus, or mouse
- Axis: Type of data, such as x and y coordinates, pressure, tilt, orientation, and hover (distance)

Actions

To implement stylus support, you need to understand what action the user is performing.

MotionEvent provides a wide variety of ACTION constants that define motion events. The most important actions for stylus include the following:

| Action | Description |
|---|---|
| ACTION_DOWN, ACTION_POINTER_DOWN | The pointer has made contact with the screen. |
| ACTION_MOVE | The pointer is moving on the screen. |
| ACTION_UP, ACTION_POINTER_UP | The pointer is no longer in contact with the screen. |
| ACTION_CANCEL | The previous or current motion set should be canceled. |

Your app can perform tasks such as starting a new stroke when ACTION_DOWN happens, drawing the stroke with ACTION_MOVE, and finishing the stroke when ACTION_UP is triggered.

The set of MotionEvent actions from ACTION_DOWN to ACTION_UP for a given pointer is called a motion set.
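The stroke lifecycle described above can be sketched in plain Kotlin. This is a minimal model, not Android code: the action constants are redeclared locally with the same values MotionEvent uses, and `Point` and `StrokeRecorder` are hypothetical names introduced only for illustration.

```kotlin
// Local copies of the MotionEvent action values, so the sketch runs
// without the Android SDK.
const val ACTION_DOWN = 0
const val ACTION_UP = 1
const val ACTION_MOVE = 2
const val ACTION_CANCEL = 3

data class Point(val x: Float, val y: Float)

// Hypothetical helper: collects one stroke per motion set.
class StrokeRecorder {
    val finished = mutableListOf<List<Point>>()
    private var current: MutableList<Point>? = null

    fun onAction(action: Int, x: Float, y: Float) {
        when (action) {
            // ACTION_DOWN starts a new stroke.
            ACTION_DOWN -> current = mutableListOf(Point(x, y))
            // ACTION_MOVE extends the stroke in progress, if any.
            ACTION_MOVE -> current?.add(Point(x, y))
            // ACTION_UP adds the last point and closes the motion set.
            ACTION_UP -> {
                current?.let { stroke ->
                    stroke.add(Point(x, y))
                    finished.add(stroke)
                }
                current = null
            }
            // ACTION_CANCEL discards the stroke in progress.
            ACTION_CANCEL -> current = null
        }
    }
}
```

In an app, the same branching would sit inside the method that receives the MotionEvent, with the coordinates read from the event.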

Pointers

Most screens are multi-touch: the system assigns a pointer to each finger, stylus, mouse, or other pointing object that interacts with the screen. A pointer index lets you get axis information for a specific pointer, such as the position of the first finger touching the screen or of the second.

Pointer indexes range from zero to the number of pointers returned by MotionEvent#getPointerCount() minus 1.

Axis values of the pointers can be accessed with the getAxisValue(axis, pointerIndex) method. When the pointer index is omitted, the system returns the value for the first pointer, pointer zero (0).

MotionEvent objects contain information about the type of pointer in use. You can get the pointer type by iterating through the pointer indexes and calling the getToolType(pointerIndex) method.

To learn more about pointers, see Handle multi-touch gestures.

Stylus inputs

You can filter for stylus inputs with TOOL_TYPE_STYLUS:

    val isStylus = TOOL_TYPE_STYLUS == event.getToolType(pointerIndex)

The stylus can also report that it is being used as an eraser with TOOL_TYPE_ERASER:

    val isEraser = TOOL_TYPE_ERASER == event.getToolType(pointerIndex)
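One way to act on the tool type is to map it to a drawing mode. The sketch below is plain Kotlin under stated assumptions: the tool-type constants are redeclared locally with the values MotionEvent defines, and `InputMode` and `inputModeFor()` are hypothetical names, not part of any Android API.

```kotlin
// Local copies of the MotionEvent tool-type values.
const val TOOL_TYPE_FINGER = 1
const val TOOL_TYPE_STYLUS = 2
const val TOOL_TYPE_ERASER = 4

enum class InputMode { DRAW, ERASE, IGNORE }

// Hypothetical policy: only the stylus tip draws, only the eraser end
// erases, and every other tool (finger, mouse, unknown) is ignored.
fun inputModeFor(toolType: Int): InputMode = when (toolType) {
    TOOL_TYPE_STYLUS -> InputMode.DRAW
    TOOL_TYPE_ERASER -> InputMode.ERASE
    else -> InputMode.IGNORE
}
```

Ignoring finger input is a design choice for a stylus-only canvas; an app that also supports finger drawing would map TOOL_TYPE_FINGER differently.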

Stylus axis data

ACTION_DOWN and ACTION_MOVE provide axis data about the stylus, namely x and y coordinates, pressure, orientation, tilt, and hover.

To enable access to this data, the MotionEvent API provides getAxisValue(int), where the parameter is any of the following axis identifiers:

| Axis | Return value of getAxisValue() |
|---|---|
| AXIS_X | X coordinate of a motion event. |
| AXIS_Y | Y coordinate of a motion event. |
| AXIS_PRESSURE | For a touchscreen or touchpad, the pressure applied by a finger, stylus, or other pointer. For a mouse or trackball, 1 if the primary button is pressed, 0 otherwise. |
| AXIS_ORIENTATION | For a touchscreen or touchpad, the orientation of a finger, stylus, or other pointer relative to the vertical plane of the device. |
| AXIS_TILT | The tilt angle of the stylus in radians. |
| AXIS_DISTANCE | The distance of the stylus from the screen. |

For example, MotionEvent.getAxisValue(AXIS_X) returns the x coordinate of the first pointer.

See also Handle multi-touch gestures.

Position

You can retrieve the x and y coordinates of a pointer with the following calls:

- MotionEvent#getAxisValue(AXIS_X) or MotionEvent#getX()
- MotionEvent#getAxisValue(AXIS_Y) or MotionEvent#getY()

Pressure

You can retrieve the pointer pressure with MotionEvent#getAxisValue(AXIS_PRESSURE) or, for the first pointer, MotionEvent#getPressure().

The pressure value for touchscreens or touchpads is a value between 0 (no pressure) and 1, but higher values can be returned depending on the screen calibration.

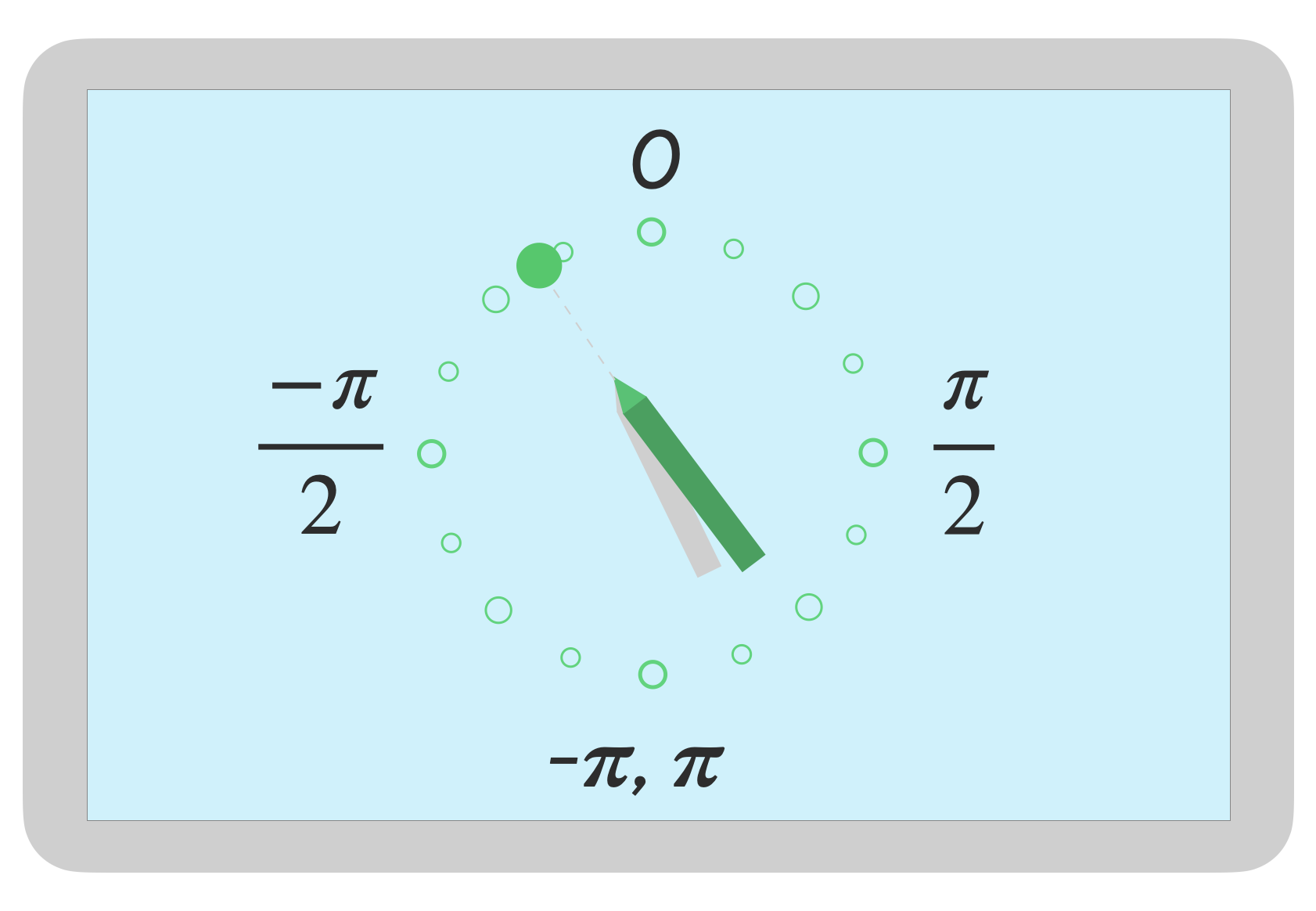
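Because pressure can exceed 1 on some screens, it is usually worth clamping the value before using it. The following is a minimal sketch of one possible mapping from pressure to stroke width; `strokeWidthFor()` and its default widths are assumptions made for illustration, not values from the platform.

```kotlin
// Hypothetical mapping from a reported pressure to a stroke width in pixels.
fun strokeWidthFor(
    pressure: Float,
    minWidth: Float = 1f,
    maxWidth: Float = 12f
): Float {
    // Pressure is nominally in 0..1 but may be higher depending on
    // calibration, so clamp it before interpolating.
    val p = pressure.coerceIn(0f, 1f)
    return minWidth + (maxWidth - minWidth) * p
}
```

A linear mapping is the simplest choice; a drawing app might prefer a curve that gives finer control at light pressure.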

Orientation

Orientation indicates which direction the stylus is pointing.

Pointer orientation can be retrieved using getAxisValue(AXIS_ORIENTATION) or getOrientation() (for the first pointer).

For a stylus, the orientation is returned as a radian value between 0 and pi (π) clockwise or between 0 and -pi counterclockwise.

Orientation enables you to implement a real-life brush. For example, if the stylus represents a flat brush, the width of the flat brush depends on the orientation of the stylus.

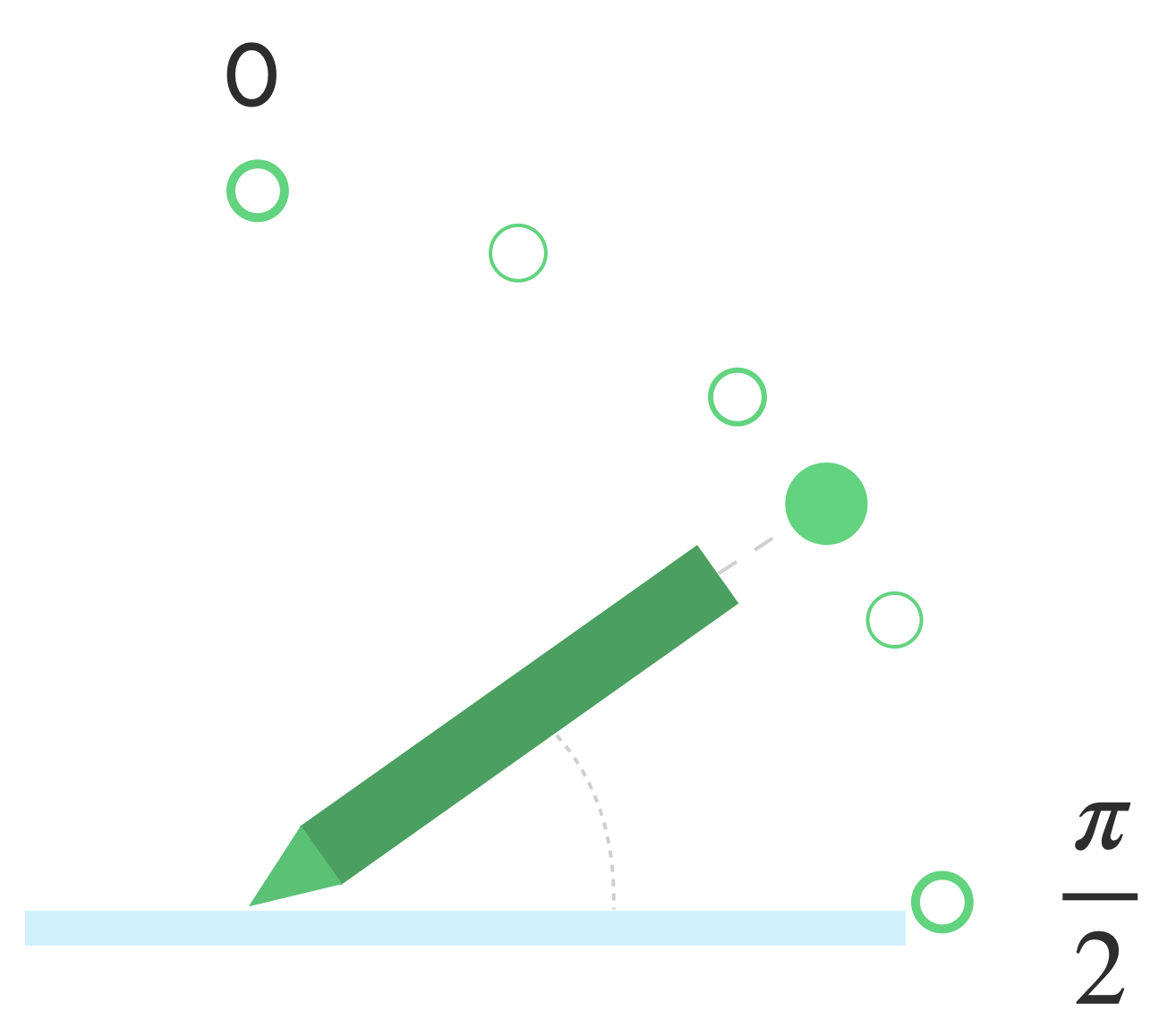
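The flat-brush idea can be sketched as a small geometric model. Everything here is an assumption made for illustration: the function name, the idea that the flat edge of the brush lies along the reported orientation, and the use of the stroke's direction of travel as a second input.

```kotlin
import kotlin.math.abs
import kotlin.math.cos
import kotlin.math.sin

// Hypothetical model of a flat brush with the given width and thickness.
// `orientation` is the stylus orientation in radians; `strokeDirection` is
// the direction of travel, in the same angular convention.
fun flatBrushFootprint(
    orientation: Float,
    strokeDirection: Float,
    brushWidth: Float,
    brushThickness: Float
): Float {
    val delta = orientation - strokeDirection
    // Widest when the flat edge is perpendicular to the direction of
    // travel, thinnest when the two are aligned.
    return brushWidth * abs(sin(delta)) + brushThickness * abs(cos(delta))
}
```

With this model, a stroke drawn along the flat edge leaves a line as thin as the brush thickness, and a stroke drawn across it leaves a line as wide as the brush.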

Tilt

Tilt measures the inclination of the stylus relative to the screen.

Tilt returns the positive angle of the stylus in radians, where zero is perpendicular to the screen and π/2 is flat on the surface.

The tilt angle can be retrieved using getAxisValue(AXIS_TILT) (there is no shortcut for the first pointer).

Tilt can be used to reproduce real-life tools as closely as possible, such as mimicking shading with a tilted pencil.

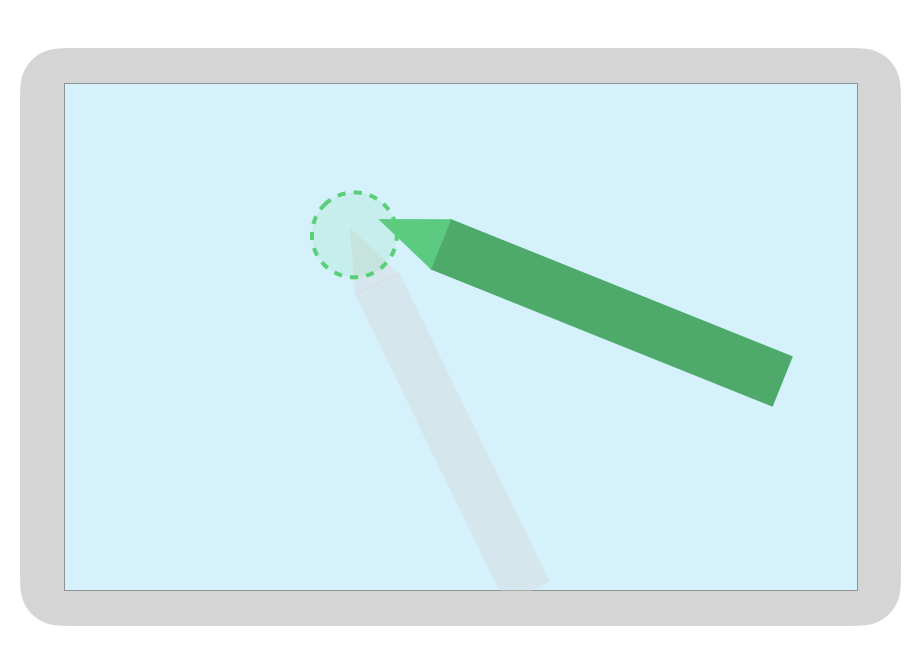
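Pencil-style shading can be approximated by widening and fading the stroke as the stylus leans over. This is only a sketch: `ShadingStyle`, `shadingFor()`, and the default scale and alpha values are hypothetical and chosen for illustration.

```kotlin
// Hypothetical shading style derived from tilt.
data class ShadingStyle(val widthScale: Float, val alpha: Float)

// An upright stylus (tilt = 0) draws a thin, opaque line; a stylus lying
// nearly flat (tilt = pi/2) draws a wide, faint one.
fun shadingFor(
    tilt: Float,
    maxWidthScale: Float = 4f,
    minAlpha: Float = 0.25f
): ShadingStyle {
    val halfPi = (Math.PI / 2).toFloat()
    // Normalize tilt to 0..1, where 0 is perpendicular and 1 is flat.
    val t = (tilt / halfPi).coerceIn(0f, 1f)
    return ShadingStyle(
        widthScale = 1f + (maxWidthScale - 1f) * t,
        alpha = 1f - (1f - minAlpha) * t
    )
}
```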

Hover

The distance of the stylus from the screen can be obtained with getAxisValue(AXIS_DISTANCE). The method returns a value from 0.0 (contact with the screen) to higher values as the stylus moves away from the screen. The hover distance between the screen and the nib (point) of the stylus depends on the manufacturer of both the screen and the stylus. Because implementations can vary, don't rely on precise values for app-critical functionality.

Stylus hover can be used to preview the size of the brush or to indicate which element is about to be selected.

Note: Compose provides modifiers that affect the interactive state of UI elements:

- hoverable: Configures the component to be hoverable using pointer enter and exit events.
- indication: Draws visual effects for the component when interactions occur.

Palm rejection, navigation, and unwanted inputs

Sometimes multi-touch screens register unwanted touches, for example, when a user naturally rests a hand on the screen for support while handwriting. Palm rejection is a mechanism that detects this behavior and notifies you that the last MotionEvent set should be canceled.

As a result, you must keep a history of user inputs so that unwanted touches can be removed from the screen and the legitimate user inputs can be re-rendered.

ACTION_CANCEL and FLAG_CANCELED

ACTION_CANCEL and FLAG_CANCELED are both designed to inform you that the previous MotionEvent set should be canceled back to the last ACTION_DOWN, so you can, for example, undo the last stroke in a drawing app for a given pointer.

ACTION_CANCEL

Added in Android 1.0 (API level 1)

ACTION_CANCEL indicates that the previous set of motion events should be canceled.

ACTION_CANCEL is triggered when any of the following is detected:

- Navigation gestures
- Palm rejection

When ACTION_CANCEL is triggered, identify the active pointer with getPointerId(getActionIndex()). Then remove the stroke created with that pointer from the input history and re-render the scene.
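The input history mentioned above can be as simple as a list of strokes tagged with the pointer ID that produced them. The sketch below is plain Kotlin; `Stroke` and `InputHistory` are hypothetical names, and the pointer ID is assumed to come from getPointerId(getActionIndex()) in the real event handler.

```kotlin
// Hypothetical stroke record tagged with the pointer that drew it.
data class Stroke(
    val pointerId: Int,
    val points: MutableList<Pair<Float, Float>> = mutableListOf()
)

class InputHistory {
    private val strokes = mutableListOf<Stroke>()

    // Call on ACTION_DOWN / ACTION_POINTER_DOWN.
    fun begin(pointerId: Int, x: Float, y: Float) {
        strokes.add(Stroke(pointerId, mutableListOf(x to y)))
    }

    // Call on ACTION_MOVE: extends the latest stroke of that pointer.
    fun extend(pointerId: Int, x: Float, y: Float) {
        strokes.lastOrNull { it.pointerId == pointerId }?.points?.add(x to y)
    }

    // Call on cancellation: removes the latest stroke of that pointer.
    // Returns true when a stroke was removed and the scene needs redrawing.
    fun cancel(pointerId: Int): Boolean {
        val index = strokes.indexOfLast { it.pointerId == pointerId }
        if (index < 0) return false
        strokes.removeAt(index)
        return true
    }

    // Strokes to draw when re-rendering the scene.
    fun snapshot(): List<Stroke> = strokes.toList()
}
```

Keeping strokes separate per pointer means a canceled palm touch can be removed without disturbing a stylus stroke that was being drawn at the same time.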

FLAG_CANCELED

Added in Android 13 (API level 33)

FLAG_CANCELED indicates that the pointer going up was an unintentional user touch. The flag is typically set when the user accidentally touches the screen, such as by gripping the device or resting the palm of the hand on the screen.

You access the flag value as follows:

    val cancel = (event.flags and FLAG_CANCELED) == FLAG_CANCELED

If the flag is set, you need to undo the last MotionEvent set, back to the last ACTION_DOWN from this pointer.

As with ACTION_CANCEL, the pointer can be found with getPointerId(actionIndex).

A MotionEvent set: the palm touch is canceled, and the display is re-rendered.

Full screen, edge-to-edge, and navigation gestures

If an app is full screen and has actionable elements near the edge, such as the canvas of a drawing or note-taking app, swiping from the bottom of the screen to display the navigation or to move the app to the background might result in an unwanted touch on the canvas.

To prevent gestures from triggering unwanted touches in your app, you can take advantage of insets and ACTION_CANCEL.

See also the Palm rejection, navigation, and unwanted inputs section.

Use the setSystemBarsBehavior() method and BEHAVIOR_SHOW_TRANSIENT_BARS_BY_SWIPE of WindowInsetsController to prevent navigation gestures from causing unwanted touch events:

    // Configure the behavior of the hidden system bars.
    windowInsetsController.systemBarsBehavior =
        WindowInsetsControllerCompat.BEHAVIOR_SHOW_TRANSIENT_BARS_BY_SWIPE

To learn more about inset and gesture management, see the related Android developer guides.

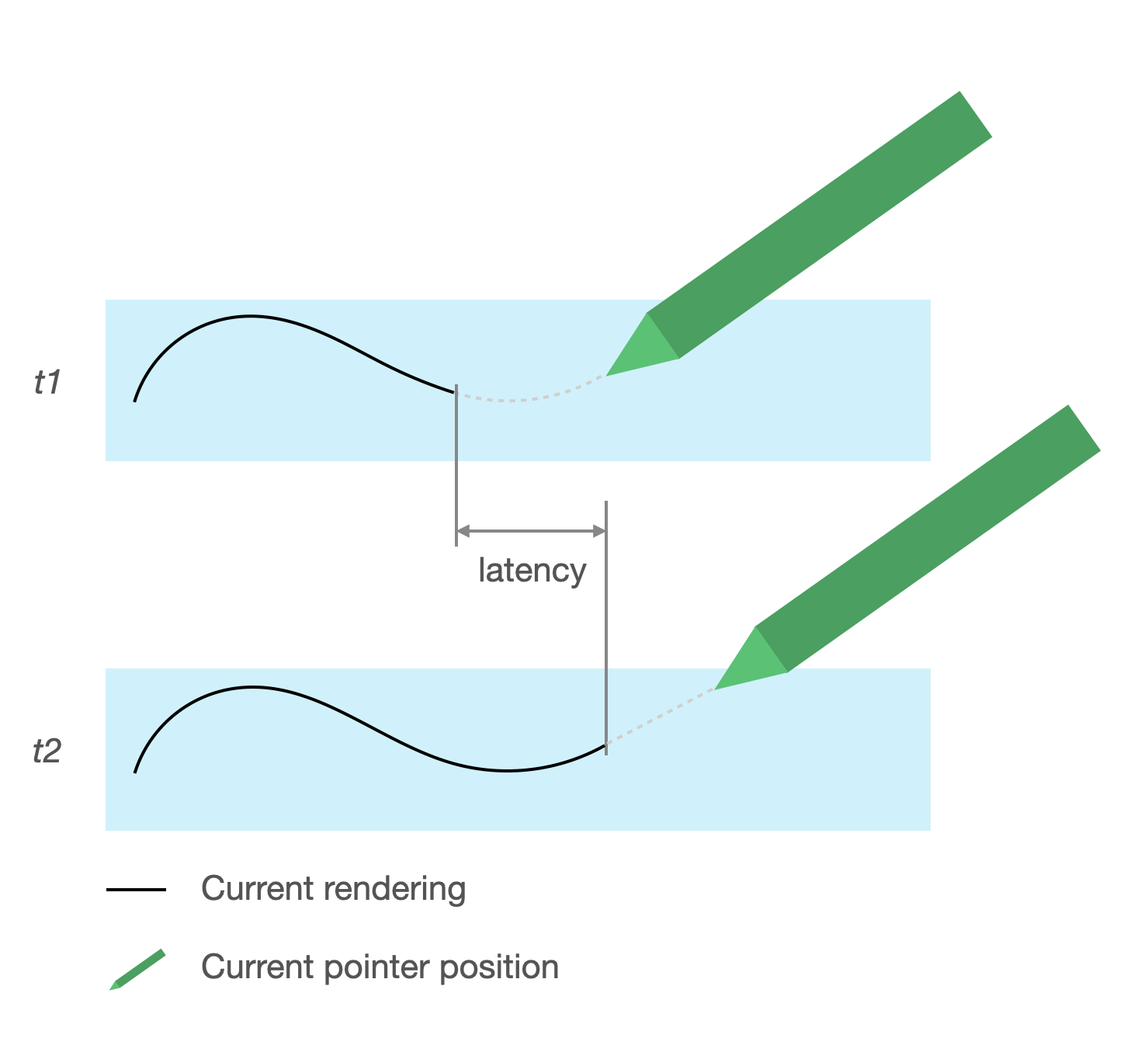

Low latency

Latency is the time required by the hardware, system, and application to process and render user input.

Latency = hardware and OS input processing + app processing + system compositing + hardware rendering

Source of latency

- Registering the stylus with the touchscreen (hardware): The initial wireless connection when the stylus and OS communicate to be registered and synced.
- Touch sampling rate (hardware): The number of times per second a touchscreen checks whether a pointer is touching the surface, ranging from 60 to 1000 Hz.
- Input processing (app): Applying color, graphic effects, and transformation to user input.
- Graphic rendering (OS + hardware): Buffer swapping and hardware processing.
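To get a feel for these numbers, the latency sum and the sampling interval implied by a given sampling rate can be computed directly. This is a small arithmetic sketch; the function names are hypothetical, and any stage durations you pass in are your own measurements or estimates rather than platform constants.

```kotlin
// Time between two consecutive touch samples, in milliseconds.
fun samplingIntervalMs(rateHz: Int): Double {
    require(rateHz > 0) { "rateHz must be positive" }
    return 1000.0 / rateHz
}

// Sum of the four stages in the latency formula above, in milliseconds.
fun totalLatencyMs(
    inputMs: Double,
    appMs: Double,
    compositingMs: Double,
    displayMs: Double
): Double = inputMs + appMs + compositingMs + displayMs
```

At the low end of the range, a 60 Hz touchscreen delivers a sample roughly every 16.7 ms; at 1000 Hz, a sample arrives every 1 ms.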

Low-latency graphics

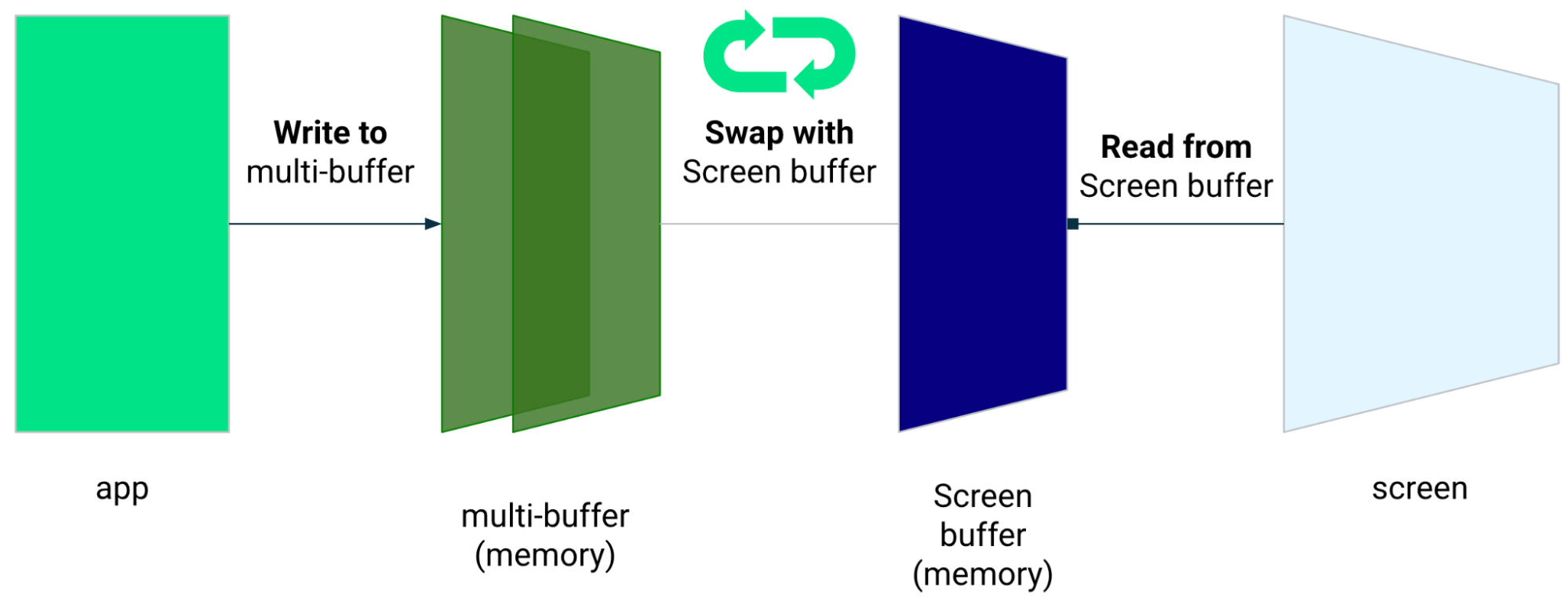

The low-latency graphics library reduces the processing time between user input and on-screen rendering.

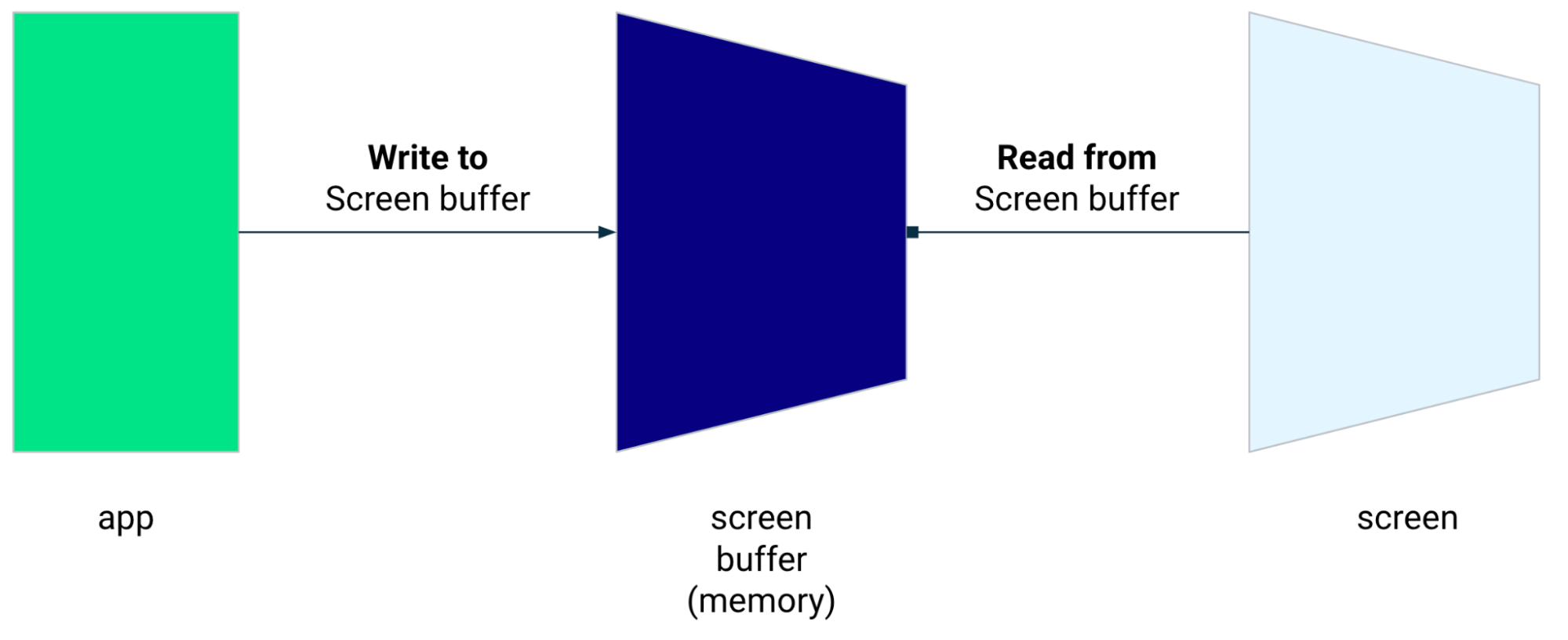

The library reduces processing time by avoiding multi-buffer rendering and by using a front-buffer rendering technique, which means writing directly to the screen.

Front-buffer rendering

The front buffer is the memory the screen uses for rendering. It is the closest apps can get to drawing directly to the screen. The low-latency library enables apps to render directly to the front buffer. This improves performance by preventing buffer swapping, which can happen with regular multi-buffer rendering or with double-buffer rendering (the most common case).

While front-buffer rendering is a great technique for rendering a small area of the screen, it is not designed for refreshing the entire screen. With front-buffer rendering, the app renders content into a buffer that the display is reading from. As a result, rendering artifacts or tearing are possible (see below).

The low-latency library is available on Android 10 (API level 29) and higher and on ChromeOS devices running Android 10 (API level 29) and higher.

Dependencies

The low-latency library provides the components for a front-buffer rendering implementation. Add the library as a dependency in the app module's build.gradle file:

    dependencies {
        implementation "androidx.graphics:graphics-core:1.0.0-alpha03"
    }

GLFrontBufferedRenderer callbacks

The low-latency library includes the GLFrontBufferedRenderer.Callback interface, which defines the following methods:

- onDrawFrontBufferedLayer()
- onDrawDoubleBufferedLayer()

The low-latency library is not opinionated about the type of data you use with GLFrontBufferedRenderer.

However, the library processes the data as a stream of hundreds of data points, so design your data to optimize memory usage and allocation.
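One way to keep allocation low when handling hundreds of points is to store them in a single primitive array instead of one object per point. The following is a minimal sketch of that idea; `PointBuffer` is a hypothetical class introduced for illustration and is not part of the low-latency library.

```kotlin
// Hypothetical growable buffer that packs x, y, and pressure into one
// FloatArray, so adding a point does not allocate a new object.
class PointBuffer(initialCapacity: Int = 256) {
    init {
        require(initialCapacity > 0) { "initialCapacity must be positive" }
    }

    private var data = FloatArray(initialCapacity * STRIDE)

    var size = 0
        private set

    fun add(x: Float, y: Float, pressure: Float) {
        // Double the backing array only when it is full.
        if ((size + 1) * STRIDE > data.size) data = data.copyOf(data.size * 2)
        val i = size * STRIDE
        data[i] = x
        data[i + 1] = y
        data[i + 2] = pressure
        size++
    }

    fun x(index: Int): Float = data[checked(index) * STRIDE]
    fun y(index: Int): Float = data[checked(index) * STRIDE + 1]
    fun pressure(index: Int): Float = data[checked(index) * STRIDE + 2]

    // Reuses the backing array for the next stroke.
    fun clear() {
        size = 0
    }

    private fun checked(index: Int): Int {
        require(index in 0 until size) { "index $index out of bounds" }
        return index
    }

    private companion object {
        const val STRIDE = 3 // floats stored per point
    }
}
```

Reusing one buffer per stroke avoids creating garbage on every motion event, which matters when events arrive at a high sampling rate.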

Callbacks

To enable rendering callbacks, implement GLFrontBufferedRenderer.Callback and override onDrawFrontBufferedLayer() and onDrawDoubleBufferedLayer(). GLFrontBufferedRenderer uses the callbacks to render your data in the most optimized way possible.

    val callback = object : GLFrontBufferedRenderer.Callback<DATA_TYPE> {

        // Called for each renderFrontBufferedLayer() request.
        override fun onDrawFrontBufferedLayer(
            eglManager: EGLManager,
            bufferInfo: BufferInfo,
            transform: FloatArray,
            param: DATA_TYPE
        ) {
            // OpenGL for front buffer, short, affecting small area of the screen.
        }

        // Called after commit() with everything rendered to the front buffer.
        // Renamed onDrawMultiBufferedLayer in later releases of the library.
        override fun onDrawDoubleBufferedLayer(
            eglManager: EGLManager,
            bufferInfo: BufferInfo,
            transform: FloatArray,
            params: Collection<DATA_TYPE>
        ) {
            // OpenGL full scene rendering.
        }
    }

Declare an instance of GLFrontBufferedRenderer

Prepare the GLFrontBufferedRenderer by providing the SurfaceView and the callbacks you created earlier. GLFrontBufferedRenderer optimizes rendering to the front buffer and the double buffer using your callbacks:

    var glFrontBufferRenderer = GLFrontBufferedRenderer<DATA_TYPE>(surfaceView, callback)

Rendering

Front-buffer rendering starts when you call the renderFrontBufferedLayer() method, which triggers the onDrawFrontBufferedLayer() callback.

Double-buffer rendering resumes when you call the commit() function, which triggers the onDrawDoubleBufferedLayer() callback.

In the example that follows, the process renders to the front buffer (fast rendering) when the user starts drawing on the screen (ACTION_DOWN) and moves the pointer around (ACTION_MOVE). The process renders to the double buffer when the pointer leaves the surface of the screen (ACTION_UP).

You can use requestUnbufferedDispatch() to ask the input system not to batch motion events but instead to deliver them as soon as they are available:

    when (motionEvent.action) {
        MotionEvent.ACTION_DOWN -> {
            // Deliver input events as soon as they arrive.
            view.requestUnbufferedDispatch(motionEvent)
            // Pointer is in contact with the screen.
            glFrontBufferRenderer.renderFrontBufferedLayer(DATA_TYPE)
        }
        MotionEvent.ACTION_MOVE -> {
            // Pointer is moving.
            glFrontBufferRenderer.renderFrontBufferedLayer(DATA_TYPE)
        }
        MotionEvent.ACTION_UP -> {
            // Pointer is no longer in contact with the screen.
            glFrontBufferRenderer.commit()
        }
        MotionEvent.ACTION_CANCEL -> {
            // Cancel front buffer; remove last motion set from the screen.
            glFrontBufferRenderer.cancel()
        }
    }

Rendering do's and don'ts

Do: Render small portions of the screen, such as handwriting, drawing, and sketching.

Don't: Update the full screen, pan, or zoom. Doing so can result in tearing.

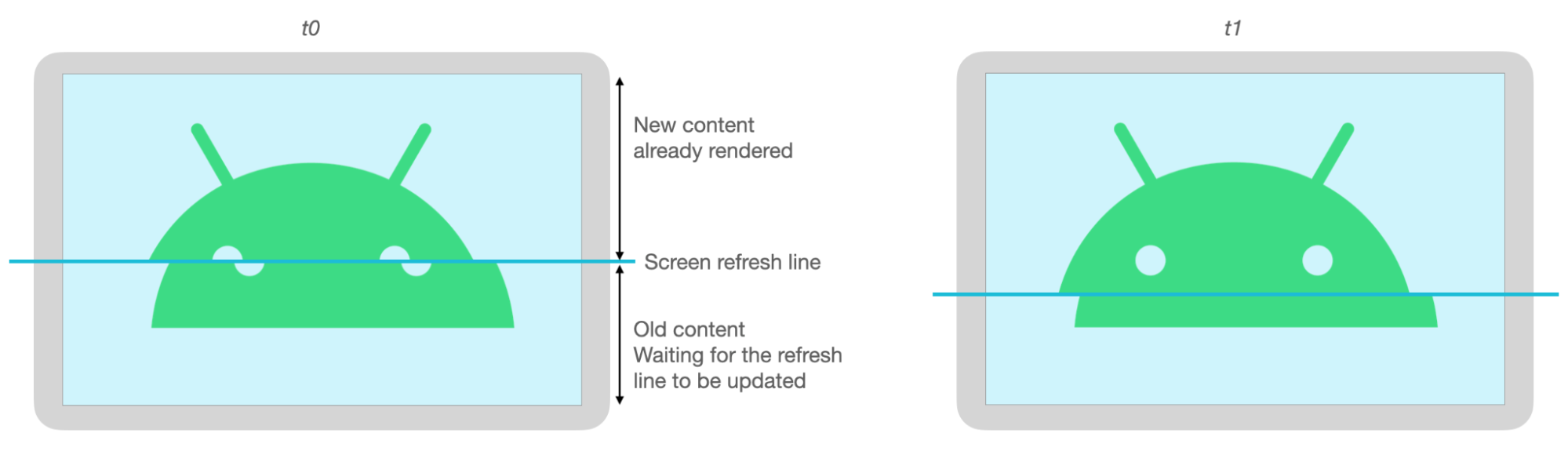

Tearing

Tearing happens when the screen refreshes while the screen buffer is being modified at the same time. One part of the screen shows new data, while another part shows old data.

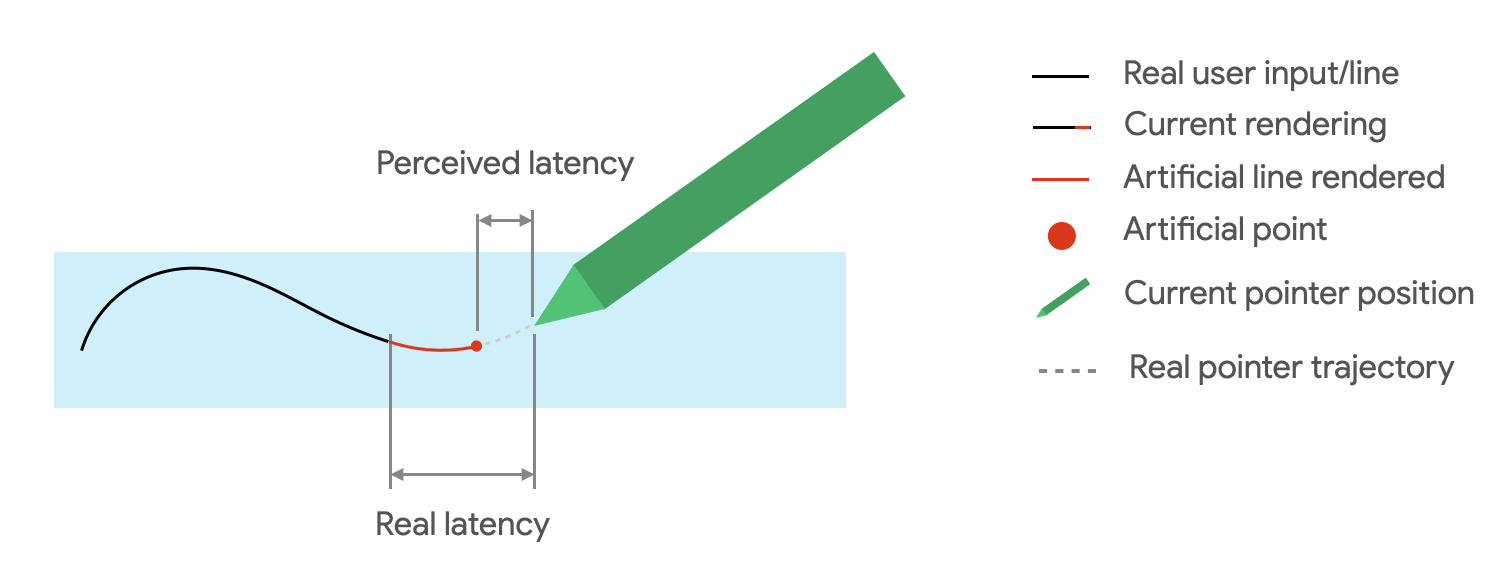

Motion prediction

The Jetpack motion prediction library reduces perceived latency by estimating the user's stroke path and providing temporary, artificial points to the renderer.

The motion prediction library gets real user inputs as MotionEvent objects. The objects contain information about x and y coordinates, pressure, and time, which the motion predictor uses to predict future MotionEvent objects.

Predicted MotionEvent objects are only estimates. Predicted events can reduce perceived latency, but predicted data must be replaced with actual MotionEvent data once it is received.

The motion prediction library is available on Android 4.4 (API level 19) and higher and on ChromeOS devices running Android 9 (API level 28) and higher.

Dependencies

The motion prediction library provides the prediction implementation. Add the library as a dependency in the app module's build.gradle file:

    dependencies {
        implementation "androidx.input:input-motionprediction:1.0.0-beta01"
    }

Implementation

The motion prediction library includes the MotionEventPredictor interface, which defines the following methods:

- record(): Stores MotionEvent objects as a record of the user's actions
- predict(): Returns a predicted MotionEvent

Declare an instance of MotionEventPredictor

    var motionEventPredictor = MotionEventPredictor.newInstance(view)

Feed the predictor with data

    motionEventPredictor.record(motionEvent)

Predict

    when (motionEvent.action) {
        MotionEvent.ACTION_MOVE -> {
            val predictedMotionEvent = motionEventPredictor?.predict()
            if (predictedMotionEvent != null) {
                // Use the predicted MotionEvent to inject a new artificial point.
            }
        }
    }

Motion prediction do's and don'ts

Do: Remove prediction points when a new predicted point is added.

Don't: Use prediction points for final rendering.
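These two rules can be enforced by keeping real and predicted points in separate collections. The sketch below is plain Kotlin and does not call the motion prediction library; `PredictedStroke` and its method names are hypothetical, and the predicted points are assumed to come from the coordinates of the MotionEvent returned by predict().

```kotlin
// Hypothetical stroke that keeps real input apart from predicted input.
class PredictedStroke {
    private val real = mutableListOf<Pair<Float, Float>>()
    private var predicted: List<Pair<Float, Float>> = emptyList()

    // A real point supersedes any earlier prediction.
    fun addReal(x: Float, y: Float) {
        real.add(x to y)
        predicted = emptyList()
    }

    // A new prediction replaces the previous one instead of appending to it.
    fun setPrediction(points: List<Pair<Float, Float>>) {
        predicted = points.toList()
    }

    // Points for the low-latency preview: real input plus the current guess.
    fun pointsForPreview(): List<Pair<Float, Float>> = real + predicted

    // Points for the committed stroke: real input only.
    fun pointsForFinalRender(): List<Pair<Float, Float>> = real.toList()
}
```

Because predicted points never enter the `real` list, a wrong guess disappears on the next event and cannot end up in the final stroke.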

Note-taking apps

ChromeOS enables your app to declare some note-taking actions.

To register an app as a note-taking app on ChromeOS, see the ChromeOS article on input.

To register an app as a note-taking app on Android, see Create a note-taking app.

Android 14 (API level 34) introduced the ACTION_CREATE_NOTE intent, which enables your app to start a note-taking activity on the lock screen.

Digital ink recognition with ML Kit

With ML Kit digital ink recognition, your app can recognize handwritten text on a digital surface in hundreds of languages. You can also classify sketches.

ML Kit provides the Ink.Stroke.Builder class to create Ink objects that can be processed by machine learning models to convert handwriting to text.

In addition to handwriting recognition, the model can recognize gestures, such as delete and circle.

See Digital ink recognition to learn more.