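Android and ChromeOS provide a variety of APIs to help you build apps that offer users an exceptional stylus experience. The MotionEvent class exposes information about stylus interaction with the screen, including stylus pressure, orientation, tilt, hover, and palm detection. Low-latency graphics and motion prediction libraries enhance stylus on-screen rendering to provide a natural, pen-and-paper-like experience.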

MotionEvent

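The MotionEvent class represents user input interactions such as the position and movement of touch pointers on the screen. For stylus input, MotionEvent also exposes pressure, orientation, tilt, and hover data.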

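Event data

To access stylus MotionEvent objects, add the pointerInteropFilter modifier to a drawing surface. Implement a ViewModel class with a method that processes motion events, and pass the method as the onTouchEvent lambda of the pointerInteropFilter modifier:

    // viewModel is assumed to be an instance of your ViewModel class that is in scope.
    @Composable
    @OptIn(ExperimentalComposeUiApi::class)
    fun DrawArea(modifier: Modifier = Modifier) {
        Canvas(
            modifier = modifier
                .clipToBounds()
                .pointerInteropFilter { viewModel.processMotionEvent(it) }
        ) {
            // Drawing code here.
        }
    }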

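A MotionEvent object provides data related to the following aspects of a UI event:

- Actions: Physical interaction with the device: touching the screen, moving a pointer over the screen surface, hovering a pointer over the screen surface
- Pointers: Identifiers of objects interacting with the screen, such as a finger, stylus, or mouse
- Axis: Type of data, such as x and y coordinates, pressure, tilt, orientation, and hover (distance)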

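Actions

To implement stylus support, you need to understand what action the user is performing.

MotionEvent provides a wide variety of ACTION constants that define motion events. The most important actions for stylus include the following: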

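| Action | Description |
|---|---|
| ACTION_DOWN, ACTION_POINTER_DOWN | Pointer has made contact with the screen. |
| ACTION_MOVE | Pointer is moving on the screen. |
| ACTION_UP, ACTION_POINTER_UP | Pointer is no longer in contact with the screen. |
| ACTION_CANCEL | The previous or current motion set should be canceled. |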

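Your app can perform tasks like starting a new stroke when ACTION_DOWN happens, drawing the stroke with ACTION_MOVE, and finishing the stroke when ACTION_UP is triggered.

The set of MotionEvent actions from ACTION_DOWN to ACTION_UP for a given pointer is called a motion set.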
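
The life cycle of a motion set can be sketched without the Android SDK. The following is a minimal sketch, not the platform's implementation: `StrokeBuilder`, `StrokePoint`, and `MotionActions` are hypothetical names, the constants mirror the values of the corresponding `MotionEvent` action constants, and only a single pointer is tracked.

```kotlin
// Values mirrored from android.view.MotionEvent so the sketch runs on plain Kotlin.
object MotionActions {
    const val ACTION_DOWN = 0
    const val ACTION_UP = 1
    const val ACTION_MOVE = 2
    const val ACTION_CANCEL = 3
}

data class StrokePoint(val x: Float, val y: Float)

// Hypothetical helper: turns one motion set (ACTION_DOWN..ACTION_UP) into one stroke.
class StrokeBuilder {
    val finishedStrokes = mutableListOf<List<StrokePoint>>()
    private var current: MutableList<StrokePoint>? = null

    fun onAction(action: Int, x: Float, y: Float) {
        when (action) {
            // Pointer touched the screen: start a new stroke.
            MotionActions.ACTION_DOWN -> current = mutableListOf(StrokePoint(x, y))
            // Pointer moved: extend the stroke in progress, if any.
            MotionActions.ACTION_MOVE -> current?.add(StrokePoint(x, y))
            // Pointer lifted: finish the stroke and store it.
            MotionActions.ACTION_UP -> {
                current?.let { points ->
                    points.add(StrokePoint(x, y))
                    finishedStrokes.add(points.toList())
                }
                current = null
            }
            // Motion set canceled: discard the stroke in progress.
            MotionActions.ACTION_CANCEL -> current = null
        }
    }
}
```

In an app, `onAction` would be fed from the masked action and coordinates of each incoming `MotionEvent`.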

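Pointers

Most screens are multi-touch: the system assigns a pointer to each finger, stylus, mouse, or other pointing object interacting with the screen. A pointer index enables you to get axis information for a specific pointer, like the position of the first finger touching the screen or the second.

Pointer indexes range from zero to the number of pointers returned by MotionEvent#getPointerCount() minus 1.

Axis values of pointers can be accessed with the getAxisValue(axis, pointerIndex) method. When the pointer index is omitted, the system returns the value for the first pointer, pointer zero (0).

MotionEvent objects contain information about the type of pointer in use. You can get the pointer type by iterating through the pointer indexes and calling the getToolType(pointerIndex) method.

To learn more about pointers, see Handle multi-touch gestures.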
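
For ACTION_POINTER_DOWN and ACTION_POINTER_UP, the index of the pointer that changed is packed into the action value alongside the action code. The sketch below illustrates that bit layout in plain Kotlin. `ActionBits`, `actionMasked`, and `actionIndex` are hypothetical names and the constants mirror the `MotionEvent` values; in an app you would call `getActionMasked()` and `getActionIndex()` instead.

```kotlin
// Values mirrored from android.view.MotionEvent.
object ActionBits {
    const val ACTION_MASK = 0xff
    const val ACTION_POINTER_INDEX_MASK = 0xff00
    const val ACTION_POINTER_INDEX_SHIFT = 8
    const val ACTION_POINTER_DOWN = 5
}

// The low 8 bits hold the action code.
fun actionMasked(action: Int): Int = action and ActionBits.ACTION_MASK

// The next 8 bits hold the index of the pointer that went down or up.
fun actionIndex(action: Int): Int =
    (action and ActionBits.ACTION_POINTER_INDEX_MASK) shr ActionBits.ACTION_POINTER_INDEX_SHIFT
```

For example, an action value of `0x0105` decodes to ACTION_POINTER_DOWN for pointer index 1.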

Stylus inputs

You can filter for stylus inputs with TOOL_TYPE_STYLUS:

    val isStylus = TOOL_TYPE_STYLUS == event.getToolType(pointerIndex)

The stylus can also report that it is being used as an eraser with TOOL_TYPE_ERASER:

    val isEraser = TOOL_TYPE_ERASER == event.getToolType(pointerIndex)
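
One way to act on the tool type is to map it to an input mode for the canvas. This is a sketch of one possible policy (draw with the stylus tip, erase with the eraser, ignore everything else), not a recommendation from the platform. `InputMode`, `inputModeFor`, and `ToolTypes` are hypothetical names, and the constants mirror the `MotionEvent` tool type values so the code runs without the Android SDK.

```kotlin
// Values mirrored from android.view.MotionEvent.
object ToolTypes {
    const val TOOL_TYPE_UNKNOWN = 0
    const val TOOL_TYPE_FINGER = 1
    const val TOOL_TYPE_STYLUS = 2
    const val TOOL_TYPE_MOUSE = 3
    const val TOOL_TYPE_ERASER = 4
}

enum class InputMode { DRAW, ERASE, IGNORE }

// Hypothetical policy for a stylus-only canvas.
fun inputModeFor(toolType: Int): InputMode = when (toolType) {
    ToolTypes.TOOL_TYPE_STYLUS -> InputMode.DRAW
    ToolTypes.TOOL_TYPE_ERASER -> InputMode.ERASE
    else -> InputMode.IGNORE // fingers, mouse, unknown tools
}
```

An app that also accepts finger drawing would map TOOL_TYPE_FINGER to `DRAW` instead of ignoring it.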

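Stylus axis data

ACTION_DOWN and ACTION_MOVE provide axis data about the stylus, namely x and y coordinates, pressure, orientation, tilt, and hover distance.

To enable access to this data, the MotionEvent API provides getAxisValue(int), where the parameter is any of the following axis identifiers: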

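| Axis | Return value of getAxisValue() |
|---|---|
| AXIS_X | X coordinate of a motion event. |
| AXIS_Y | Y coordinate of a motion event. |
| AXIS_PRESSURE | For a touchscreen or touchpad, the pressure applied by a finger, stylus, or other pointer. For a mouse or trackball, 1 if the primary button is pressed, 0 otherwise. |
| AXIS_ORIENTATION | For a touchscreen or touchpad, the orientation of a finger, stylus, or other pointer relative to the vertical plane of the device. |
| AXIS_TILT | The tilt angle of the stylus in radians. |
| AXIS_DISTANCE | The distance of the stylus from the screen. |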

For example, MotionEvent.getAxisValue(AXIS_X) returns the x coordinate for the first pointer.

See also Handle multi-touch gestures.

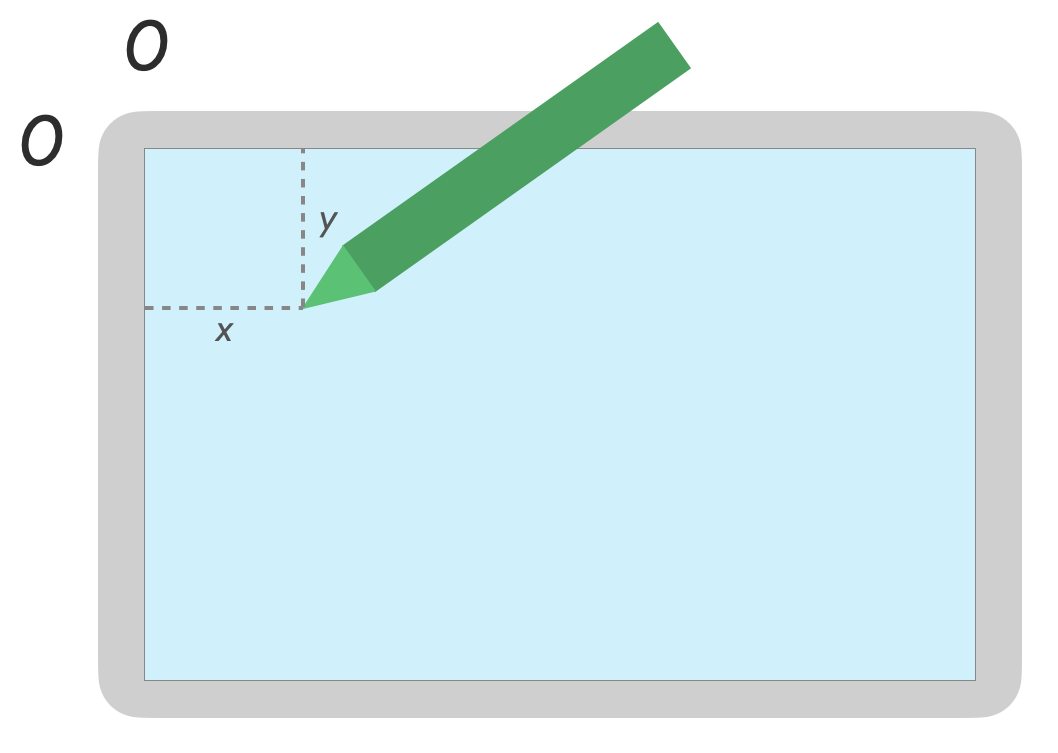

Position

You can retrieve the x and y coordinates of a pointer with the following calls:

- MotionEvent#getAxisValue(AXIS_X) or MotionEvent#getX()
- MotionEvent#getAxisValue(AXIS_Y) or MotionEvent#getY()

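Pressure

You can retrieve the pointer pressure with MotionEvent#getAxisValue(AXIS_PRESSURE) or, for the first pointer, MotionEvent#getPressure().

The pressure value for touchscreens or touchpads is a value between 0 (no pressure) and 1, but higher values can be returned depending on the screen calibration.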
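
Because calibration can push the pressure above 1, it helps to clamp the value before using it. The following is a minimal sketch that maps pressure linearly to a stroke width. `strokeWidthForPressure` and its default widths are hypothetical, chosen only for illustration.

```kotlin
// Hypothetical mapping: clamps pressure to [0, 1] and interpolates
// linearly between a minimum and a maximum stroke width.
fun strokeWidthForPressure(
    pressure: Float,
    minWidth: Float = 1f,
    maxWidth: Float = 12f
): Float {
    // Some screens report values above 1, so clamp before interpolating.
    val clamped = pressure.coerceIn(0f, 1f)
    return minWidth + (maxWidth - minWidth) * clamped
}
```

A linear mapping is the simplest choice; a drawing app might prefer a curve that gives finer control at light pressure.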

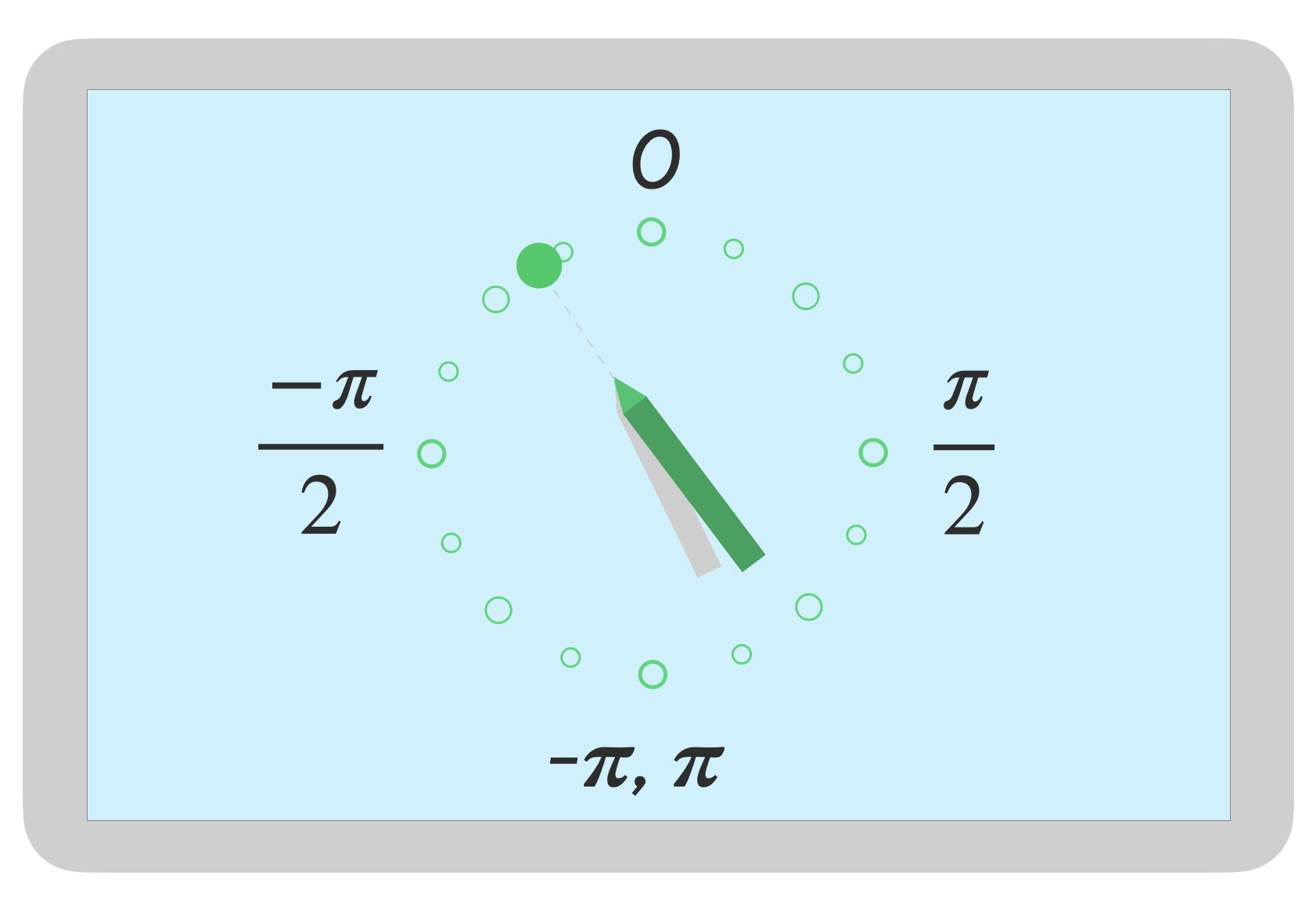

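Orientation

Orientation indicates which direction the stylus is pointing.

Pointer orientation can be retrieved using getAxisValue(AXIS_ORIENTATION) or getOrientation() (for the first pointer).

For a stylus, the orientation is returned as a radian value between 0 and pi (π) clockwise, or between 0 and -pi counterclockwise.

Orientation enables you to implement a real-life brush. For example, if the stylus represents a flat brush, the width of the flat brush depends on the stylus orientation.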

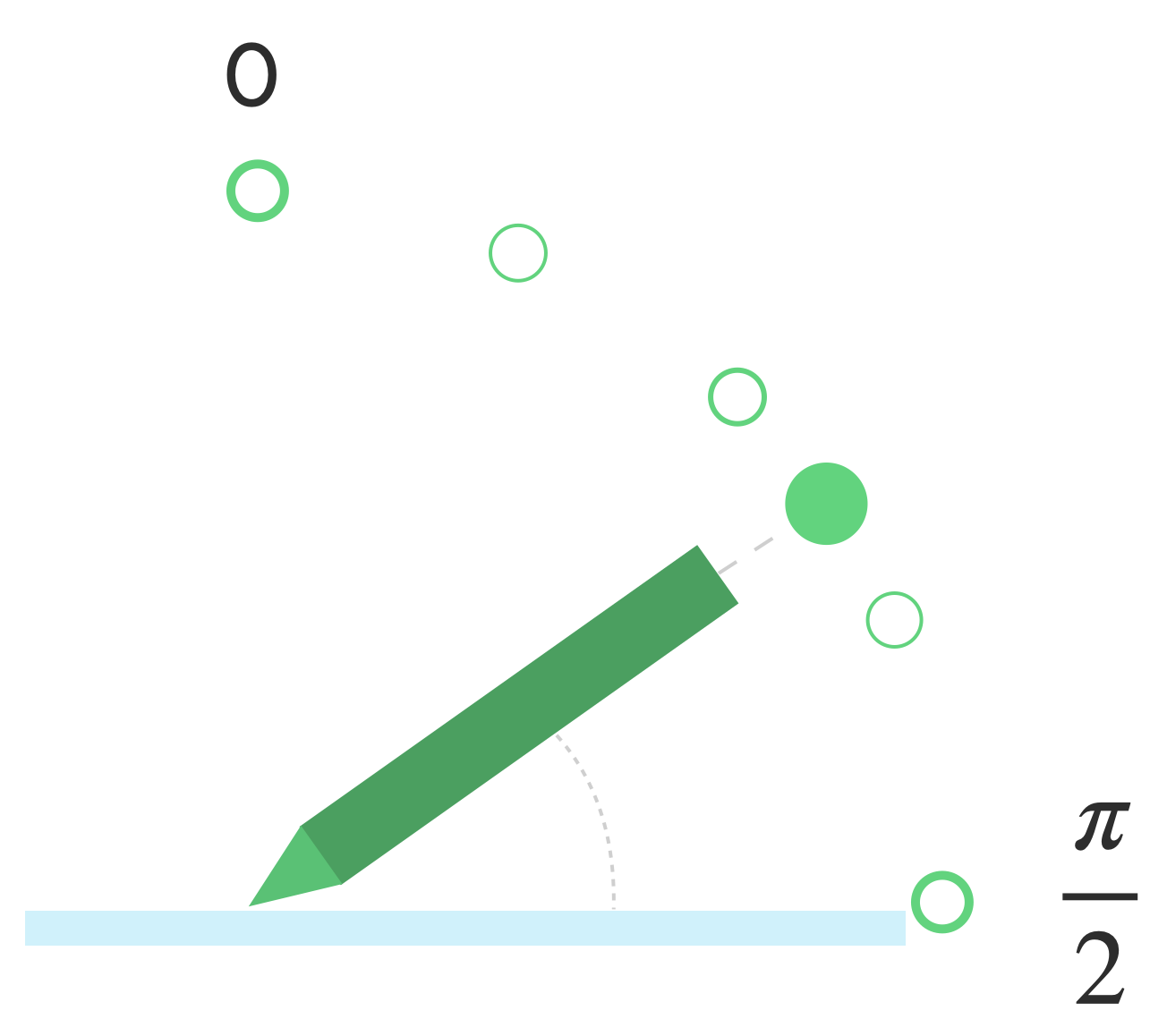

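Tilt

Tilt measures the inclination of the stylus relative to the screen.

Tilt returns the positive angle of the stylus in radians, where zero is perpendicular to the screen and π/2 is flat on the surface.

The tilt angle can be retrieved using getAxisValue(AXIS_TILT) (there is no shortcut method for the first pointer).

Tilt can be used to reproduce real-life tools as closely as possible, for example to mimic shading with a tilted pencil.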
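
The pencil shading idea can be sketched as a function from tilt to mark width and opacity. This is an illustrative model under assumed values, not a calibrated one: `PencilMark`, `pencilMarkForTilt`, the default widths, and the 0.7 opacity falloff are all hypothetical.

```kotlin
data class PencilMark(val width: Float, val alpha: Float)

// Hypothetical model: an upright pencil (tilt 0) draws a thin, opaque line;
// a pencil lying flat (tilt π/2) draws a wide, lighter band.
fun pencilMarkForTilt(
    tiltRadians: Float,
    tipWidth: Float = 2f,
    sideWidth: Float = 20f
): PencilMark {
    val maxTilt = (kotlin.math.PI / 2).toFloat()
    // Normalize to 0 (perpendicular to the screen) .. 1 (flat on the surface).
    val t = (tiltRadians / maxTilt).coerceIn(0f, 1f)
    val width = tipWidth + (sideWidth - tipWidth) * t
    val alpha = 1f - 0.7f * t
    return PencilMark(width, alpha)
}
```

The input is the value returned by `getAxisValue(AXIS_TILT)`; out-of-range values are clamped rather than rejected.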

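Hover

The distance of the stylus from the screen can be obtained with getAxisValue(AXIS_DISTANCE). The method returns a value from 0.0 (contact with the screen) to higher values as the stylus moves away from the screen. The hover distance between the screen and the nib (point) of the stylus depends on the manufacturer of both the screen and the stylus. Because implementations can vary, don't rely on precise values for app-critical functionality.

Stylus hover can be used to preview the size of the brush or to indicate that a button is going to be selected.

Note: Compose provides modifiers that affect the interactive state of UI elements:

- hoverable: Configures a component to be hoverable using pointer enter and exit events.
- indication: Draws visual effects for this component when interactions occur.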

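Palm rejection, navigation, and unwanted inputs

Sometimes multi-touch screens register unwanted touches, for example, when a user naturally rests their hand on the screen for support while handwriting. Palm rejection is a mechanism that detects this behavior and notifies you that the last MotionEvent set should be canceled.

As a result, you must keep a history of user inputs so that the unwanted touches can be removed from the screen and the legitimate user inputs can be re-rendered.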

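ACTION_CANCEL and FLAG_CANCELED

ACTION_CANCEL and FLAG_CANCELED are both designed to inform you that the previous MotionEvent set should be canceled from the last ACTION_DOWN, so you can, for example, undo the last stroke in a drawing app for a given pointer.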

ACTION_CANCEL

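Added in Android 1.0 (API level 1)

ACTION_CANCEL indicates that the previous set of motion events should be canceled.

ACTION_CANCEL is triggered when the system detects either of the following:

- Navigation gestures
- Palm rejection

When ACTION_CANCEL is triggered, you should identify the active pointer with getPointerId(getActionIndex()). Then remove the stroke created with that pointer from the input history, and re-render the scene.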

FLAG_CANCELED

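Added in Android 13 (API level 33)

FLAG_CANCELED indicates that the pointer going up was an unintentional user touch. The flag is typically set when the user accidentally touches the screen, such as by gripping the device or placing the palm of the hand on the screen.

You access the flag value as follows:

    val cancel = (event.flags and FLAG_CANCELED) == FLAG_CANCELED

If the flag is set, you need to undo the last MotionEvent set, from the last ACTION_DOWN from this pointer.

Like ACTION_CANCEL, the pointer can be found with getPointerId(actionIndex).

Palm rejection applied to a MotionEvent set: the palm touch is canceled, and the display is re-rendered.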
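
The input history that palm rejection requires can be sketched as a list of strokes keyed by pointer ID. This is a minimal sketch under assumptions, not the platform's mechanism: `StrokeHistory`, `InkStroke`, `CancelFlags`, and `isCanceled` are hypothetical names, and the flag constant mirrors the value of `MotionEvent.FLAG_CANCELED` so the code runs without the Android SDK.

```kotlin
// Value mirrored from android.view.MotionEvent.FLAG_CANCELED.
object CancelFlags {
    const val FLAG_CANCELED = 0x20
}

fun isCanceled(flags: Int): Boolean =
    (flags and CancelFlags.FLAG_CANCELED) == CancelFlags.FLAG_CANCELED

data class InkStroke(
    val pointerId: Int,
    val points: MutableList<Pair<Float, Float>> = mutableListOf()
)

// Hypothetical input history: one stroke per motion set, tagged with its pointer ID.
class StrokeHistory {
    private val strokes = mutableListOf<InkStroke>()

    // Call on ACTION_DOWN or ACTION_POINTER_DOWN.
    fun begin(pointerId: Int, x: Float, y: Float) {
        strokes.add(InkStroke(pointerId, mutableListOf(x to y)))
    }

    // Call on ACTION_MOVE for each active pointer.
    fun extend(pointerId: Int, x: Float, y: Float) {
        strokes.lastOrNull { it.pointerId == pointerId }?.points?.add(x to y)
    }

    // Call on ACTION_CANCEL, or on a pointer-up event with FLAG_CANCELED set.
    // Returns true if a stroke was removed and the scene must be re-rendered.
    fun cancelLast(pointerId: Int): Boolean {
        val index = strokes.indexOfLast { it.pointerId == pointerId }
        if (index < 0) return false
        strokes.removeAt(index)
        return true
    }

    fun snapshot(): List<InkStroke> = strokes.toList()
}
```

After `cancelLast` returns true, the app redraws the scene from `snapshot()`, which now contains only the legitimate strokes.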

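Full screen, edge-to-edge, and navigation gestures

If an app is full screen and has actionable elements near the screen edge, swiping from the bottom of the screen to display the navigation or move the app to the background might register as an unwanted touch on the canvas.

To prevent gestures from triggering unwanted touches in your app, you can take advantage of insets and ACTION_CANCEL.

See also the Palm rejection, navigation, and unwanted inputs section.

Use the setSystemBarsBehavior() method and BEHAVIOR_SHOW_TRANSIENT_BARS_BY_SWIPE of WindowInsetsController to prevent navigation gestures from causing unwanted touch events:

    // Configure the behavior of the hidden system bars.
    windowInsetsController.systemBarsBehavior =
        WindowInsetsControllerCompat.BEHAVIOR_SHOW_TRANSIENT_BARS_BY_SWIPE

To learn more, see the Android documentation on inset and gesture management.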

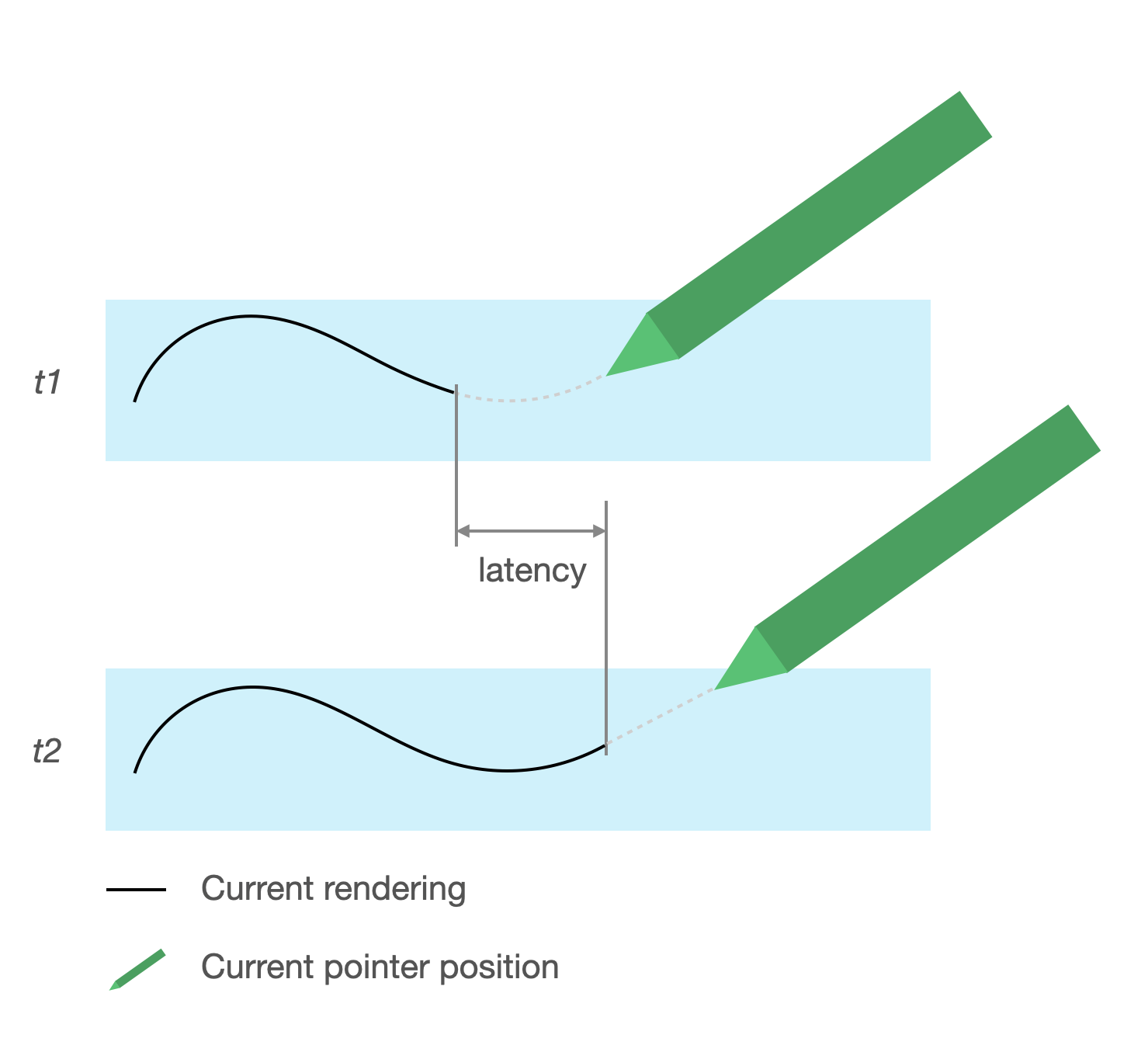

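Low latency

Latency is the time required by the hardware, system, and application to process and render user input.

Latency = hardware and OS input processing + app processing + system compositing + hardware rendering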

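Sources of latency

- Registering the stylus with the touchscreen (hardware): Initial wireless connection when the stylus and OS communicate to be registered and synced.
- Touch sampling rate (hardware): The number of times per second a touchscreen checks whether a pointer is touching the surface, ranging from 60 Hz to 1000 Hz.
- Input processing (app): Applying color, graphic effects, and transformations to user input.
- Graphic rendering (OS + hardware): Buffer swapping, hardware processing.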
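
To get a feel for what the touch sampling rate contributes, the interval between two samples is simply the inverse of the rate. The sketch below works that out; `samplingIntervalMs` is a hypothetical helper, and the result is only the gap between samples, not the total latency.

```kotlin
// Time between two consecutive touch samples, in milliseconds.
fun samplingIntervalMs(sampleRateHz: Int): Double {
    require(sampleRateHz > 0) { "Sample rate must be positive" }
    return 1000.0 / sampleRateHz
}
```

At 60 Hz a touch can wait up to about 16.7 ms before it is sampled, while at 1000 Hz the wait is at most 1 ms.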

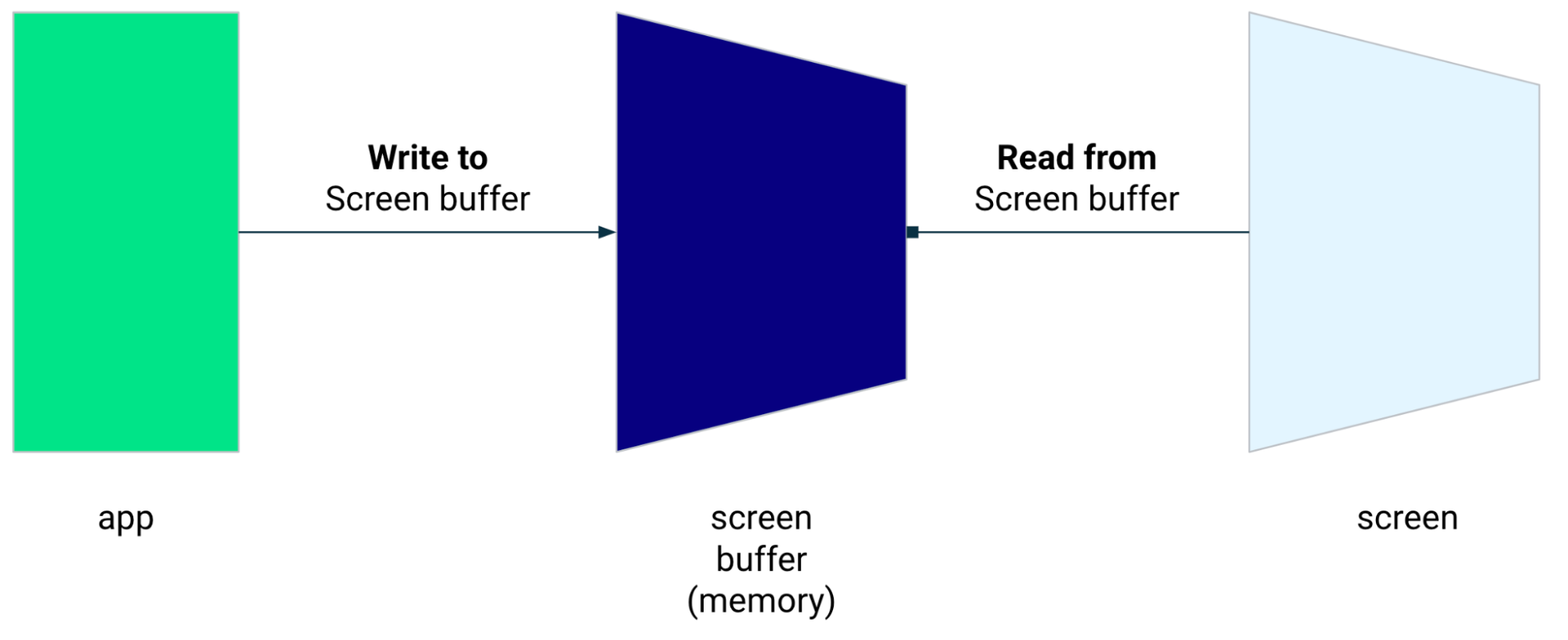

低延迟图形

Jetpack 低延迟图形库 缩短了从用户输入到屏幕呈现之间的处理时间。

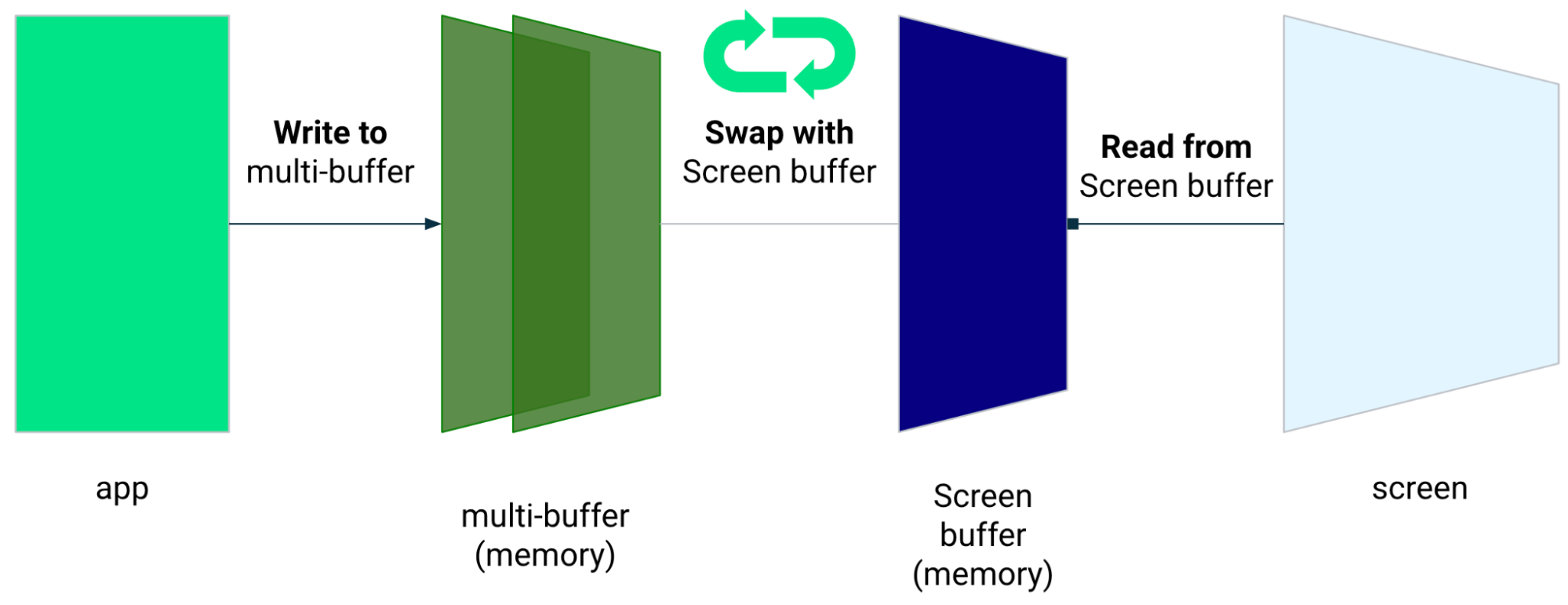

该库可避免多缓冲区渲染和 它利用前端缓冲区渲染技术,即直接将数据写入 屏幕上。

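Front-buffer rendering

The front buffer is the memory the screen uses for rendering. It is the closest apps can get to drawing directly to the screen. The low-latency library enables apps to render directly to the front buffer. This improves performance by preventing buffer swapping, which can happen with regular multi-buffer rendering or double-buffer rendering (the most common case).

While front-buffer rendering is a great technique for rendering a small area of the screen, it is not designed for refreshing the entire screen. With front-buffer rendering, the app renders content into a buffer from which the display is reading. As a result, rendering artifacts or tearing are possible (see below).

The low-latency library is available on Android 10 (API level 29) and higher and on ChromeOS devices running Android 10 (API level 29) and higher.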

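Dependencies

The low-latency library provides the components for a front-buffer rendering implementation. Add the library as a dependency in the app's module build.gradle file:

    dependencies {
        implementation "androidx.graphics:graphics-core:1.0.0-alpha03"
    }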

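GLFrontBufferedRenderer callbacks

The low-latency library includes the GLFrontBufferedRenderer.Callback interface, which defines the following methods:

- onDrawFrontBufferedLayer()
- onDrawDoubleBufferedLayer()

The low-latency library is not opinionated about the type of data you use with GLFrontBufferedRenderer.

However, the library processes the data as a stream of hundreds of data points, so design your data to optimize memory usage and allocation.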

Callbacks

To enable rendering callbacks, implement GLFrontBufferedRenderer.Callback and override onDrawFrontBufferedLayer() and onDrawDoubleBufferedLayer(). GLFrontBufferedRenderer uses the callbacks to render your data in the most optimized way possible.

    val callback = object : GLFrontBufferedRenderer.Callback<DATA_TYPE> {
        override fun onDrawFrontBufferedLayer(
            eglManager: EGLManager,
            bufferInfo: BufferInfo,
            transform: FloatArray,
            param: DATA_TYPE
        ) {
            // OpenGL for front buffer, short, affecting small area of the screen.
        }

        override fun onDrawDoubleBufferedLayer(
            eglManager: EGLManager,
            bufferInfo: BufferInfo,
            transform: FloatArray,
            params: Collection<DATA_TYPE>
        ) {
            // OpenGL full scene rendering.
        }
    }

Declare an instance of GLFrontBufferedRenderer by providing a SurfaceView and the callbacks. GLFrontBufferedRenderer optimizes rendering to the front buffer and the double buffer using your callbacks:

    var glFrontBufferRenderer = GLFrontBufferedRenderer<DATA_TYPE>(surfaceView, callback)

Rendering

Front-buffer rendering starts when you call the renderFrontBufferedLayer() method, which triggers the onDrawFrontBufferedLayer() callback.

Double-buffer rendering resumes when you call the commit() function, which triggers the onDrawDoubleBufferedLayer() callback.

In the following example, the process renders to the front buffer (fast rendering) when the user starts drawing on the screen (ACTION_DOWN) and moves the pointer around (ACTION_MOVE). The process renders to the double buffer when the pointer leaves the surface of the screen (ACTION_UP).

You can use requestUnbufferedDispatch() to ask the input system not to batch motion events but to deliver them as soon as they are available:

    when (motionEvent.action) {
        MotionEvent.ACTION_DOWN -> {
            // Deliver input events as soon as they arrive.
            view.requestUnbufferedDispatch(motionEvent)
            // Pointer is in contact with the screen.
            glFrontBufferRenderer.renderFrontBufferedLayer(DATA_TYPE)
        }
        MotionEvent.ACTION_MOVE -> {
            // Pointer is moving.
            glFrontBufferRenderer.renderFrontBufferedLayer(DATA_TYPE)
        }
        MotionEvent.ACTION_UP -> {
            // Pointer is no longer in contact with the screen.
            glFrontBufferRenderer.commit()
        }
        MotionEvent.ACTION_CANCEL -> {
            // Cancel front buffer; remove last motion set from the screen.
            glFrontBufferRenderer.cancel()
        }
    }

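Rendering do's and don'ts

Do: render small portions of the screen, such as handwriting, drawing, and sketching.

Don't: render full-screen updates, panning, or zooming. These can result in tearing.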

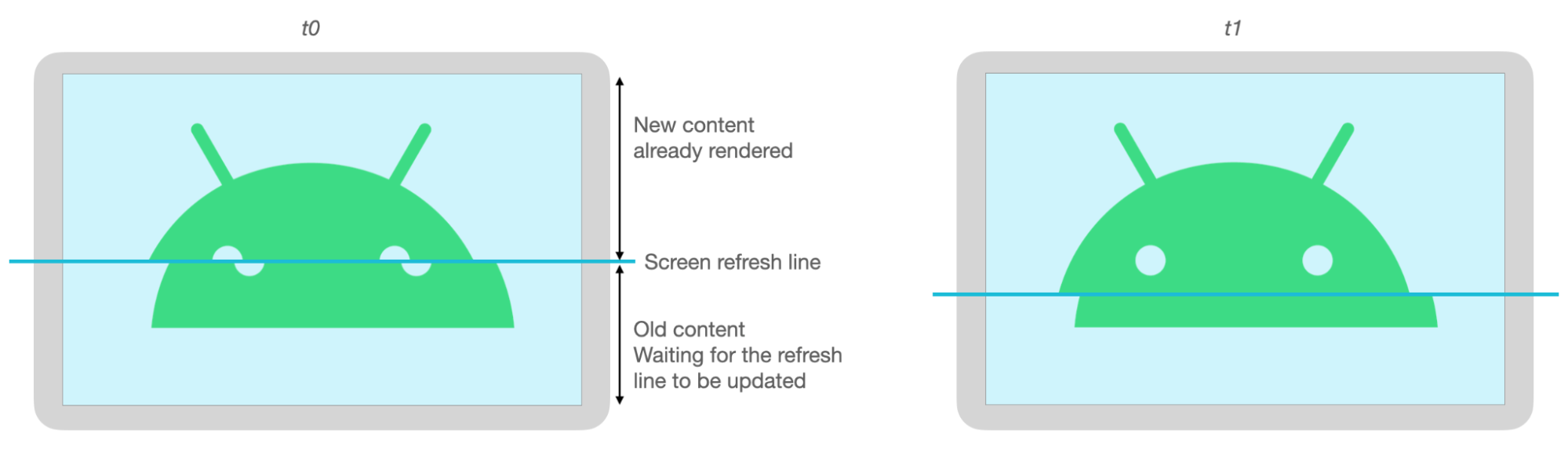

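Tearing

Tearing happens when the screen refreshes while the screen buffer is being modified at the same time. One part of the screen shows new data, while another part shows old data.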

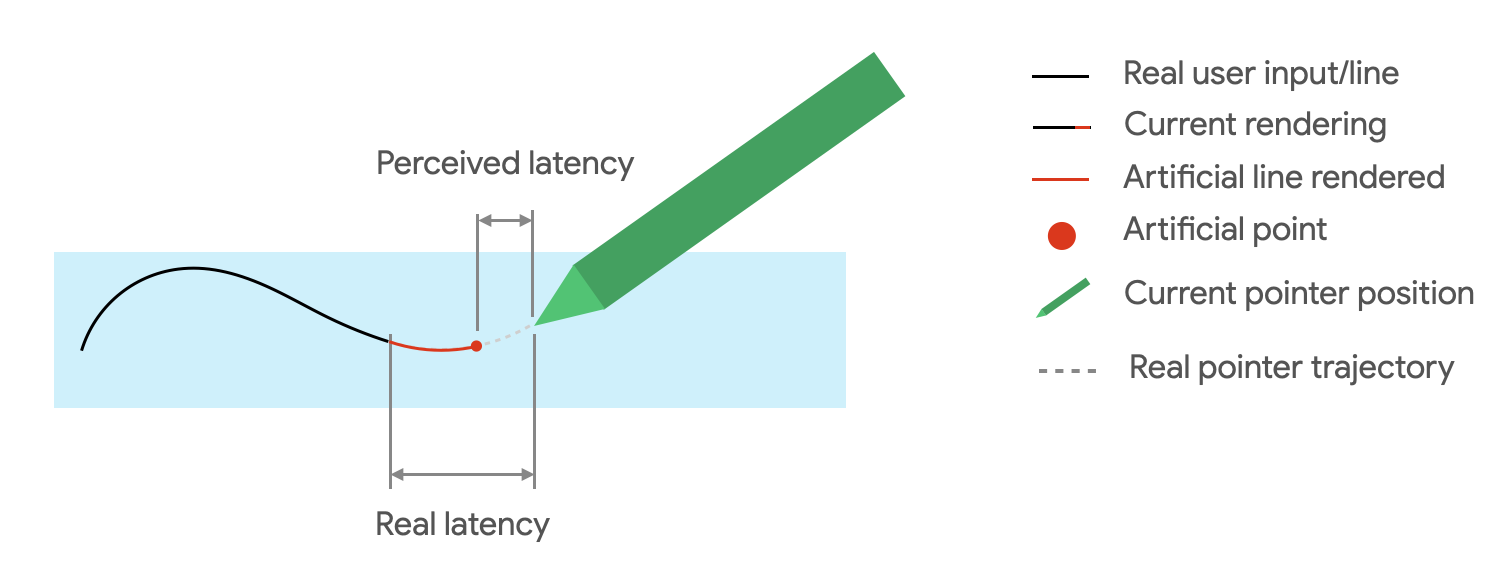

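Motion prediction

The Jetpack motion prediction library reduces perceived latency by estimating the user's stroke path and providing temporary, artificial points to the renderer.

The motion prediction library gets real user inputs as MotionEvent objects. The objects contain information about x and y coordinates, pressure, and time, which the motion predictor uses to predict future MotionEvent objects.

Predicted MotionEvent objects are only estimates. Predicted events can reduce perceived latency, but predicted data must be replaced with actual MotionEvent data once it is received.

The motion prediction library is available on Android 4.4 (API level 19) and higher and on ChromeOS devices running Android 9 (API level 28) and higher.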

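Dependencies

The motion prediction library provides the implementation of prediction. Add the library as a dependency in the app's module build.gradle file:

    dependencies {
        implementation "androidx.input:input-motionprediction:1.0.0-beta01"
    }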

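Implementation

The motion prediction library includes the MotionEventPredictor interface, which defines the following methods:

- record(): Stores MotionEvent objects as a record of the user's actions
- predict(): Returns a predicted MotionEvent

Declare an instance of MotionEventPredictor

    var motionEventPredictor = MotionEventPredictor.newInstance(view)

Feed the predictor with data

    motionEventPredictor.record(motionEvent)

Predict

    when (motionEvent.action) {
        MotionEvent.ACTION_MOVE -> {
            val predictedMotionEvent = motionEventPredictor?.predict()
            if (predictedMotionEvent != null) {
                // Use the predicted MotionEvent to inject a new artificial point.
            }
        }
    }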

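Motion prediction do's and don'ts

Do: remove old prediction points when a new predicted point is added.

Don't: use prediction points for final rendering.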
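
Both rules can be enforced by keeping predicted points apart from real ones. The following is a minimal sketch of that bookkeeping in plain Kotlin. `PredictedStroke` and `InkPoint` are hypothetical names, and the predicted point would come from `MotionEventPredictor.predict()` in a real app.

```kotlin
data class InkPoint(val x: Float, val y: Float)

// Hypothetical container that never mixes predicted points into the final stroke.
class PredictedStroke {
    private val realPoints = mutableListOf<InkPoint>()
    private var predicted: InkPoint? = null

    // A real input point arrived: store it and drop the stale prediction.
    fun addReal(point: InkPoint) {
        realPoints.add(point)
        predicted = null
    }

    // Replace the previous prediction, if any, with the new one.
    fun setPrediction(point: InkPoint?) {
        predicted = point
    }

    // Points for the low-latency preview: real points plus the current prediction.
    fun previewPoints(): List<InkPoint> =
        predicted?.let { realPoints + it } ?: realPoints.toList()

    // Points for final rendering: real input only.
    fun finalPoints(): List<InkPoint> = realPoints.toList()
}
```

The preview path draws `previewPoints()` while the pointer is down, and the committed stroke is built from `finalPoints()` only.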

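Note-taking apps

ChromeOS enables your app to declare some note-taking actions.

To register an app as a note-taking app on ChromeOS, see Input compatibility.

To register an app as a note-taking app on Android, see Create a note-taking app.

Android 14 (API level 34) introduced the ACTION_CREATE_NOTE intent, which enables your app to start a note-taking activity on the lock screen.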

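Digital ink recognition with ML Kit

With ML Kit digital ink recognition, your app can recognize handwritten text on a digital surface in hundreds of languages. You can also classify sketches.

ML Kit provides the Ink.Stroke.Builder class to create Ink objects that can be processed by machine learning models to convert handwriting to text.

In addition to handwriting recognition, the model is able to recognize gestures, such as delete and circle.

See Digital ink recognition to learn more.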